-

veeeammeupscotty

- Enthusiast

- Posts: 33

- Liked: 2 times

- Joined: May 05, 2017 3:06 pm

- Full Name: JP


Replication Woes

I'm trying to replicate some large VMs (12 TB of data) over a WAN link (50 Mbps symmetric). Change rates are fairly low, but the digest calculation is taking too long. I'm working with Veeam support (case 02133986) and doing all I can to speed up the calculation (proxies at each site, sufficient compute resources). This wouldn't be such an issue, except that regular VM backups cannot run while the replication job is running. It sounds like the only way around this would be to copy the backup files to a secondary repository and use that as the source for replication, which isn't ideal for obvious reasons. Any other ideas? At this point I'm going to investigate other replication options (vSphere Replication and SAN-to-SAN) to see if they might work better.
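For a sense of scale, here is a rough back-of-the-envelope sketch in Python of how long the link alone needs to move a given amount of data. It assumes decimal units (1 TB = 10^12 bytes), a fully utilized link, and no compression or deduplication. The 1% daily change rate is a made-up figure for illustration, not a measured value.

```python
def transfer_hours(data_bytes, link_bits_per_second):
    """Hours needed to push data_bytes over a link of the given bit rate."""
    return data_bytes * 8 / link_bits_per_second / 3600

LINK_BPS = 50e6   # 50 Mbps symmetric WAN link
FULL_SIZE = 12e12  # 12 TB of VM data, decimal units

# Sending everything over the wire (what a full re-seed over WAN would cost).
full = transfer_hours(FULL_SIZE, LINK_BPS)
print(f"full copy: {full:.0f} h (~{full / 24:.1f} days)")  # full copy: 533 h (~22.2 days)

# Hypothetical 1% daily change rate: 120 GB of changed blocks per day.
daily = transfer_hours(0.01 * FULL_SIZE, LINK_BPS)
print(f"daily increment: {daily:.1f} h")  # daily increment: 5.3 h
```

Under these assumptions the incrementals fit comfortably in a day, while anything that forces the whole data set across the link does not.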

-

foggy

- Veeam Software

- Posts: 21208

- Liked: 2179 times

- Joined: Jul 11, 2011 10:22 am

- Full Name: Alexander Fogelson


Re: Replication Woes

Are you saying digests are re-calculated during each replication job run? This shouldn't be the case.

-

veeeammeupscotty


Re: Replication Woes

Well, that was part of the issue: it was re-calculating digests after the initial seed without any changes or failures. Since then, the replication job has failed (due to another issue), and I haven't attempted it again because I don't want to be without regular backups for the 60+ hours it took last time.

-

foggy


Re: Replication Woes

Job failure can indeed be the reason for digest recalculation. This process requires the target-side proxy to read the entire replica disk, so it may take considerable time for large VMs: its duration depends on the size of the data. You can expect a digest calculation to take as long as an active full backup of the VM to a fast repository. In any case, you need to let it complete so that it doesn't happen during subsequent job runs. Make sure the correct proxies are selected and the expected transport mode is used. Alternatively, just re-seed the entire replica.
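As a rough illustration of why a full read of the replica disk takes so long, the Python sketch below estimates read time from disk size and sustained read throughput. The throughput figures are hypothetical placeholders, not values measured in this environment, and a real digest calculation involves more than a sequential read.

```python
def full_read_hours(size_bytes, read_mb_per_s):
    """Hours needed to read size_bytes at a sustained rate in MB/s (decimal)."""
    return size_bytes / (read_mb_per_s * 1e6) / 3600

size = 12e12  # 12 TB replica, decimal units
for rate in (50, 100, 200):  # hypothetical sustained read rates in MB/s
    print(f"{rate} MB/s -> {full_read_hours(size, rate):.1f} h")
```

If the proxy sustains only around 50 MB/s in the selected transport mode, the read alone lands in the same range as the 60+ hours reported earlier, which is why checking the proxy and transport mode is worthwhile.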

-

veeeammeupscotty


Re: Replication Woes

It's interesting that you say the digests could take as long as an active full backup, because it was taking at least twice as long (storage infrastructure is identical at each location). To be clear, the primary issue here isn't the fact that this is taking so long (though it would be great to improve this); it's that the regular backup job of the VM cannot run while replication is ongoing. I still don't understand why this is the case if I select the backup repository to be the replication source instead of the VM itself.

-

foggy


Re: Replication Woes

That's in fact unexpected as well, since the backup job has a higher priority and should interrupt a replication job that reads from this chain.

-

veeeammeupscotty


Re: Replication Woes

When you say interrupted, do you mean the replication job will fail or just be put on hold until the backup is complete? I believe the backup job waits saying "waiting for resources" or something.

-

foggy


Re: Replication Woes

The job will fail, but the missing blocks will be transferred during the next job run, so the restore point will eventually be repaired.

-

veeeammeupscotty


Re: Replication Woes

Can you explain the technical reason why the replication job will fail in this scenario? Unless the backup job is doing a transform operation it shouldn't need to modify the backup file from which replication is occurring so there shouldn't be a locking issue.

-

skrause

- Veteran

- Posts: 487

- Liked: 107 times

- Joined: Dec 08, 2014 2:58 pm

- Full Name: Steve Krause


Re: Replication Woes

In general, Veeam will only allow one job to work with the files at any given time to avoid potential conflicts/data corruption.

Steve Krause

Veeam Certified Architect


-

veeeammeupscotty


Re: Replication Woes

foggy wrote: "The job will fail but the missing blocks will be transferred during the next job run, so finally the restore point will be repaired."

When the job fails in this way, will it have to re-calculate digests for the entire disk?

-

foggy


Re: Replication Woes

No, I believe it should not in this case.

-

veeeammeupscotty


Re: Replication Woes

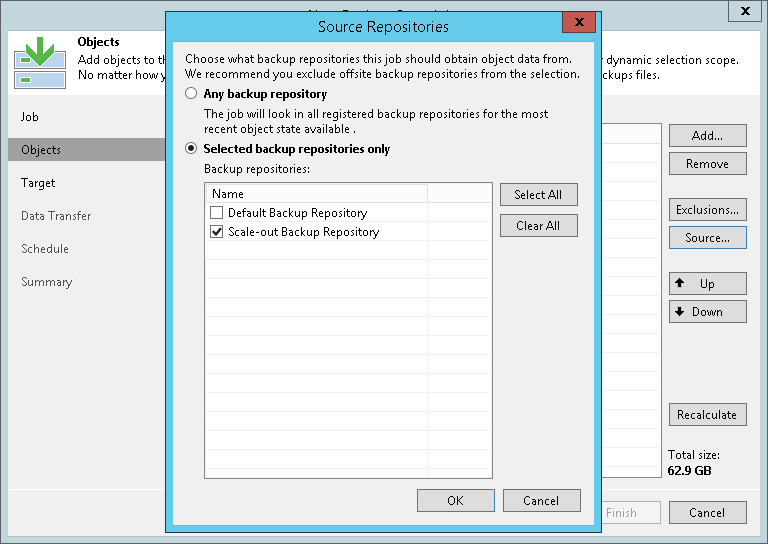

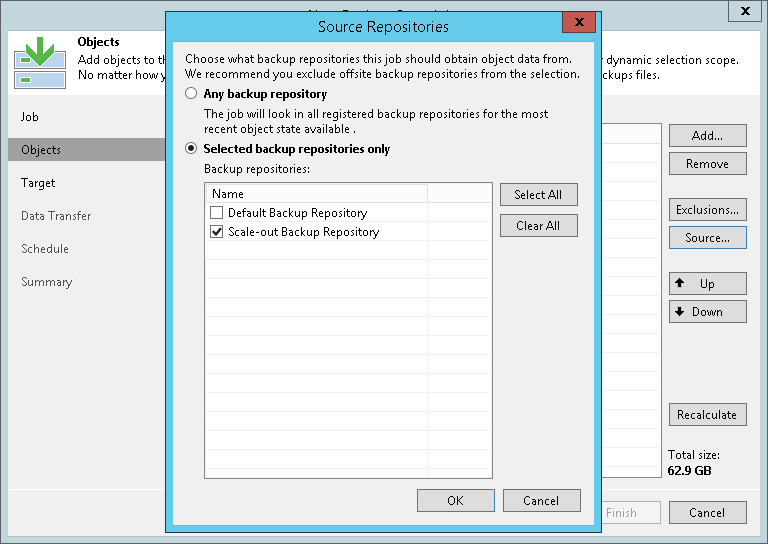

I think I figured out part of the problem. The backup repository has a default maximum of 4 concurrent tasks. Since the same repository is used for both backup and replication, 4 VMs replicating at once occupy every slot, which leaves the backup job with a "resource not ready" condition when it runs. So it seems there are two main ways to get around this:

1. Increase the concurrent task limit on the repository (this only works if it can be raised above the number of VMs in the job).

2. Split the replication job into multiple jobs, one per VM (since there doesn't seem to be a way to limit the number of VMs replicating at a time within the job itself).

Neither of these is ideal, since the replication job will eventually contain around 100 VMs, so let me know if I'm missing something here. Is there any reason why a concurrent processing limit can only be set on the repository and not on the job itself? I think this would be a good feature request.
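To make the slot arithmetic concrete, here is a toy model in Python of a repository with a fixed number of task slots. It is only a first-come, first-served sketch with no job priorities, so it does not describe Veeam's actual scheduler. The function name and the 60-hour task length are illustrative assumptions.

```python
import heapq

def backup_wait_hours(max_tasks, replica_task_hours, backup_request_hour):
    """Hours a backup task waits for a slot behind already-queued replica tasks."""
    # Each heap entry is the hour at which one repository slot becomes free.
    slot_free_at = [0] * max_tasks
    heapq.heapify(slot_free_at)

    # Replica tasks were queued first, so they claim slots in order.
    for duration in replica_task_hours:
        start = heapq.heappop(slot_free_at)
        heapq.heappush(slot_free_at, start + duration)

    # The backup task takes the earliest slot that is free after its request.
    earliest_free = heapq.heappop(slot_free_at)
    return max(earliest_free, backup_request_hour) - backup_request_hour

print(backup_wait_hours(4, [60] * 4, 1))  # 4 slots, 4 VMs: prints 59
print(backup_wait_hours(5, [60] * 4, 1))  # 5 slots, 4 VMs: prints 0
```

In this model one spare slot is enough for the backup to start immediately, which is the idea behind option 1. With around 100 VMs queued in a single job, the limit would have to exceed 100 for the same effect.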


-

foggy


Re: Replication Woes

As I've mentioned above, the backup job has a higher priority than a replication job reading from the same chain, so replication should be terminated regardless of the number of occupied slots. I can see that your support case was closed due to no response from your side. I recommend contacting support to re-open it and continue the investigation.
