Let me elaborate a bit more on this.

As we are seeing strong demand from Veeam customers who want to use a cloud storage solution for their backup archives, we recently enrolled in the Wasabi MSP Program (after using Veeam Cloud Connect for years).

The biggest challenge we are currently facing is the initial upload to the Wasabi S3 bucket when it amounts to several terabytes. Even though the nightly “upload window” and the bandwidth allotted to Veeam can cope with the average size of the daily incremental uploads, many customers will struggle to complete an initial upload of that size.
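To put rough numbers on this, here is a minimal Python sketch that estimates how many nightly windows an initial upload would need. The bandwidth, window length and the 80 % efficiency factor are illustrative assumptions, not measurements from our customers.

```python
import math


def nights_for_initial_upload(data_tb: float, bandwidth_mbps: float,
                              window_hours: float, efficiency: float = 0.8) -> int:
    """Estimate how many nightly upload windows an initial upload needs.

    data_tb: size of the initial upload in decimal terabytes (10**12 bytes)
    bandwidth_mbps: bandwidth allotted to Veeam during the window, in Mbit/s
    window_hours: length of the nightly upload window, in hours
    efficiency: assumed fraction of the nominal bandwidth actually achieved
    """
    total_bits = data_tb * 10**12 * 8
    bits_per_window = bandwidth_mbps * 10**6 * efficiency * window_hours * 3600
    # Any partial window still costs a whole night, hence the ceiling.
    return math.ceil(total_bits / bits_per_window)
```

For example, `nights_for_initial_upload(5, 100, 8)` returns 18: under these assumptions a 5 TB initial upload over 100 Mbit/s for 8 hours a night would take more than two weeks of nights.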

We recently contacted Wasabi support to get more information about the Wasabi Ball, and unfortunately we were told that it is only available in the USA (they apparently expect it to become available in Europe in Q4/22). More importantly, using the Wasabi Ball with Object Lock is not supported (https://wasabi-support.zendesk.com/hc/e ... ject-Lock-), and immutable backups are exactly what both we and our customers rely on these days to protect backups against attacks.

As a result, I am trying to think outside the box and find out whether a workaround exists that would let us perform the initial upload to the Wasabi S3 bucket over our Gigabit Fiber Optic Internet, without putting stress on the customer's network in problematic scenarios.
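The gain from moving the upload to our side can be sketched as continuous transfer time. The 100 Mbit/s customer link, the 5 TB size and the 80 % efficiency factor below are assumptions chosen only for illustration.

```python
def upload_hours(data_tb: float, bandwidth_mbps: float, efficiency: float = 0.8) -> float:
    """Hours needed to push data_tb (decimal TB) through a link running continuously."""
    total_bits = data_tb * 10**12 * 8
    effective_bps = bandwidth_mbps * 10**6 * efficiency  # assumed usable throughput
    return total_bits / effective_bps / 3600


for label, mbps in [("customer link (assumed 100 Mbit/s)", 100),
                    ("our Gigabit link (1000 Mbit/s)", 1000)]:
    print(f"{label}: {upload_hours(5, mbps):.1f} h for 5 TB")
```

With these assumptions, 5 TB takes roughly 139 hours of continuous transfer at 100 Mbit/s versus about 14 hours at 1 Gbit/s.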

So far I have come up with the following solution, and I would like to confirm whether it makes sense before going ahead with a pilot customer. The steps below might sound complex and time-consuming, but I believe they are much easier done than described:

==================================================

1. We ship a properly sized, Microsoft Windows-based physical helper server to the customer; it will be used as a temporary backup repository.

2. We add the helper server to the customer's Veeam Backup & Replication server (by name rather than by IP address) and then add it as a helper Microsoft Windows server backup repository backed by direct attached storage.

3. We add a helper SOBR (I will refer to it as SOBR2 from now on) to the customer's Veeam Backup & Replication server and choose the helper repository created in step 2 as its Performance Tier.

4. We add the Wasabi S3 bucket to the customer's backup infrastructure as an S3 Compatible object storage repository. While doing this, we select the "Use the following gateway server" check box and choose the helper server added in step 2 as the gateway server towards the Wasabi S3 bucket.

5. We add the Wasabi S3 bucket added in step 4 to SOBR2 as the Capacity Tier extent. While doing this, we configure the time window so that any copy of data to the Capacity Tier is initially prohibited.

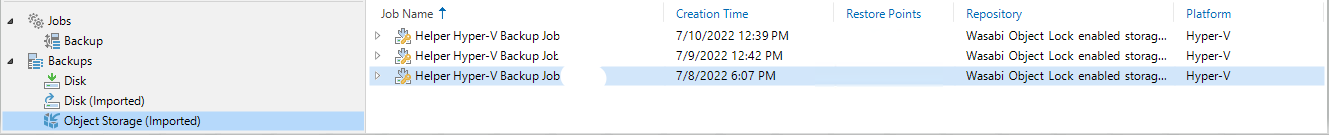

6. On the customer's Veeam Backup & Replication server, we clone an existing Backup or Backup Copy Job (depending on the needs) to create a helper job with the same selections. This helper job will target SOBR2.

7. We let the helper job run once so that it creates the backup chain in the Performance Tier of SOBR2 configured in step 3.

8. The helper Microsoft Windows-based physical server is shipped back to us.

9. We create a helper VLAN behind our Gigabit Fiber Optic Internet and connect it to the customer's backup infrastructure (for example, using Veeam PN).

10. We change the IP address of the helper box and make sure that name resolution works on the customer's Veeam Backup & Replication server, so that it can reach the helper server on our side of the Veeam PN tunnel.

11. We modify the time window settings on SOBR2 to allow the copy of data to the Capacity Tier over our Gigabit Fiber Optic Internet.

12. Once the copy of data to the Capacity Tier has completed successfully, we edit the settings of the Wasabi S3 bucket added in step 4 and clear the "Use the following gateway server" check box, so that the helper server added in step 2 is no longer used as the gateway server towards the Wasabi S3 bucket.

13. We delete the helper job targeting SOBR2, SOBR2 itself, the simple helper backup repository, the helper Microsoft Windows server added in step 2, and the Veeam PN connection (basically, we clean up all the temporary configuration).

14. On the customer's Veeam Backup & Replication server, we add a new SOBR (I will refer to it as SOBR1 from now on). As its Performance Tier, we choose the simple repository that is the target of the existing Backup or Backup Copy Job we cloned in step 6. That job is then reconfigured to target SOBR1.

15. We add the Wasabi S3 bucket from step 4 (already populated with data) to SOBR1 as the Capacity Tier extent, and then configure the time window and the other Capacity Tier settings as needed.

==================================================
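Before trying this on a pilot customer, it may help to write the ordering constraints down. The following is a hypothetical Python model, not Veeam's API or PowerShell: class and field names are made up, the time window is reduced to a single open/closed flag, and it assumes that one object storage repository can serve as Capacity Tier for only one SOBR at a time, which under that assumption forces step 13 to come before step 15.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical model of the plan above; these classes are NOT Veeam APIs.


@dataclass
class ObjectRepo:
    name: str
    gateway: Optional[str] = None      # None: "Use the following gateway server" cleared
    attached_to: Optional[str] = None  # SOBR using this repository as Capacity Tier


@dataclass
class Sobr:
    name: str
    performance_tier: str
    window_open: bool = False          # simplified stand-in for the Capacity Tier time window
    capacity_tier: Optional[ObjectRepo] = None

    def attach(self, repo: ObjectRepo) -> None:
        # Assumption: one object storage repository serves one SOBR at a time.
        if repo.attached_to is not None:
            raise ValueError(f"{repo.name} is still attached to {repo.attached_to}")
        repo.attached_to = self.name
        self.capacity_tier = repo

    def can_copy(self) -> bool:
        # Data is copied to the Capacity Tier only if one exists and the window allows it.
        return self.capacity_tier is not None and self.window_open

    def remove(self) -> None:
        # Model assumption: deleting the SOBR releases its Capacity Tier extent.
        if self.capacity_tier is not None:
            self.capacity_tier.attached_to = None
            self.capacity_tier = None


def run_plan() -> ObjectRepo:
    sobr2 = Sobr("SOBR2", performance_tier="helper-repo")          # step 3
    wasabi = ObjectRepo("wasabi-bucket", gateway="helper-server")  # step 4
    sobr2.attach(wasabi)                                           # step 5
    assert not sobr2.can_copy()  # nothing is uploaded while the box is on site
    # steps 6-10: helper job runs, box is shipped back and reached via Veeam PN
    sobr2.window_open = True                                       # step 11
    assert sobr2.can_copy()      # upload now goes through the helper gateway on our side
    wasabi.gateway = None                                          # step 12
    sobr2.remove()                                                 # step 13
    sobr1 = Sobr("SOBR1", performance_tier="existing-repo")        # step 14
    sobr1.attach(wasabi)                                           # step 15
    return wasabi
```

In this model, attaching the bucket to SOBR1 before calling `sobr2.remove()` makes `attach()` raise `ValueError`, which is exactly the ordering mistake it is meant to catch.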

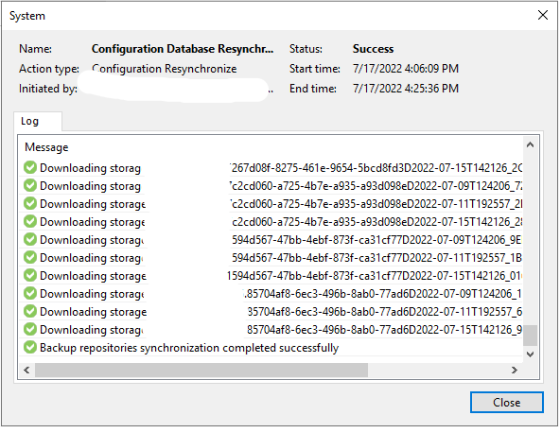

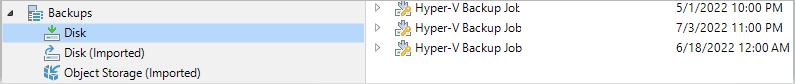

After performing the above steps, I expect Veeam to reuse the objects already stored in the bucket without needing to upload them again.
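One way to check this expectation during the pilot would be to take a listing of the bucket (object key and size) right after step 12 and again after the first offload from SOBR1, then compare them. The sketch below assumes both listings have already been exported to dictionaries, for example with a tool based on the S3 ListObjectsV2 call; the object keys in the example are hypothetical. A delta roughly in line with the new restore points would be consistent with Veeam reusing the existing objects, while a delta close to the original upload size would point to a full re-upload.

```python
from typing import Dict, Tuple


def upload_delta(before: Dict[str, int], after: Dict[str, int]) -> Tuple[int, int, int]:
    """Compare two bucket listings (object key -> size in bytes).

    Returns (new objects, objects whose size changed, bytes in new or changed objects).
    """
    new_keys = [key for key in after if key not in before]
    changed_keys = [key for key in after if key in before and after[key] != before[key]]
    added_bytes = sum(after[key] for key in new_keys + changed_keys)
    return len(new_keys), len(changed_keys), added_bytes


before = {"blocks/a": 10, "blocks/b": 20}
after = {"blocks/a": 10, "blocks/b": 25, "blocks/c": 5}
print(upload_delta(before, after))  # (1, 1, 30)
```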

I would really appreciate it if you could spend some time confirming whether my plan makes sense.

I wish you a great rest of the day.

Thanks!

Massimiliano