For the future we're considering the following options:

- New SAN all-flash storage with XFS repos

- Huawei OceanProtect with DataTurbo, dedupe, etc.

We have roughly 100 TB of source data and a fairly unpredictable change rate, since it's a service provider environment with tenants from all kinds of businesses.

We perform daily incremental backups, keep them on disk for 30 days / 52 weeks, and also copy them to a second datacenter site.

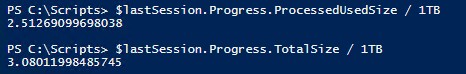

To size the OceanProtect correctly, we need to find out our daily and yearly change rate, which is hard to determine because of the block-clone savings. We do not have Veeam ONE.

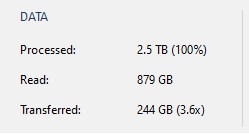

I wrote a script that finds the last successful run of each backup job, compares "Processed" with "Read" data, and calculates the change rate. To my understanding, "Read" is the amount of data that CBT (or RCT, in our Hyper-V case) reports as changed, and "Transferred" is the amount of data that actually travels through the network after it has been compressed by the source proxy. Is this correct? Where does per-job deduplication happen in Veeam? Do I have to take it into account when reading the job session stats, or does it happen afterwards?
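For anyone who wants to reproduce the calculation, here is a minimal Python sketch of the same logic (not my actual script). It assumes the session statistics have already been exported, e.g. to CSV, into records with hypothetical fields `job`, `end_time`, `result`, `processed_bytes` and `read_bytes`; the result label `"Success"` is also an assumption. It also shows the difference between averaging the per-job rates and weighting them by processed size.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Session:
    # Hypothetical record shape for one exported job session.
    job: str
    end_time: datetime
    result: str           # assumed labels, e.g. "Success", "Warning", "Failed"
    processed_bytes: int  # "Processed" in the session stats
    read_bytes: int       # "Read" in the session stats


def last_successful(sessions):
    """Return the most recent successful session per job name."""
    latest = {}
    for s in sessions:
        if s.result != "Success":
            continue
        current = latest.get(s.job)
        if current is None or s.end_time > current.end_time:
            latest[s.job] = s
    return latest


def change_rates(sessions):
    """Per-job change rate = Read / Processed of the last successful run."""
    return {
        job: s.read_bytes / s.processed_bytes
        for job, s in last_successful(sessions).items()
        if s.processed_bytes > 0
    }


def weighted_change_rate(sessions):
    """Overall rate weighted by processed size, so large jobs count more."""
    latest = list(last_successful(sessions).values())
    processed = sum(s.processed_bytes for s in latest)
    read = sum(s.read_bytes for s in latest)
    return read / processed if processed else 0.0
```

A plain average of the per-job rates gives a small job with 30% change the same weight as a large job with 3%, so for capacity sizing the weighted figure is probably the more meaningful one.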

For the sizing estimate of the new storage, we really need the "raw" change rate, because OceanProtect uses its own compression and deduplication mechanisms to achieve the best space savings.

My script, which compares "Processed" with "Read" amounts, reports a change rate between 3% and 30% per day, which results in an overall average of 12.6%. That seems like quite a lot to me. I don't know whether this is really accurate or whether I misunderstood some of the measurements Veeam provides. I would also need the yearly change rate, and I guess it's not as simple as multiplying the daily change rate by 365.
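To illustrate why multiplying by 365 is probably misleading: the product counts a block again every time it changes, while a weekly restore point only holds the net difference to the previous weekly. The Python sketch below compares the two under a deliberately simple, assumed model in which every block changes independently with probability `daily_rate` per day. Real workloads tend to rewrite the same hot blocks over and over, so the distinct fraction would usually be even lower than this model suggests. Both function names are hypothetical.

```python
def naive_yearly(daily_rate, days=365):
    """Daily rate times days: counts a block once per day it changes."""
    return daily_rate * days


def distinct_fraction_changed(daily_rate, days):
    """Fraction of distinct blocks changed at least once within `days`.

    ASSUMPTION: each block changes independently with probability
    `daily_rate` per day, so P(unchanged after n days) = (1 - r) ** n.
    """
    if not 0.0 <= daily_rate <= 1.0:
        raise ValueError("daily_rate must be between 0 and 1")
    return 1 - (1 - daily_rate) ** days


# Under this model, a weekly restore point differs from the previous
# weekly by distinct_fraction_changed(r, 7) of the source, which is
# less than the 7 * r that summing seven daily increments would give.
```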

Does anyone have experience getting reliable numbers for this?

I can share my script if anyone is interested.