-

kkuszek

- Enthusiast

- Posts: 92

- Liked: 6 times

- Joined: Mar 13, 2015 3:12 pm

- Full Name: Kurt Kuszek

- Contact:

v9 - Tape performance reports

I just started my first restore from tape since switching from v8 to v9. I need one file and, as we know, normal behavior in v8 is to read the whole tape.

The processing rate seems very low to me, though. The restore has been running for 18 hours and is at 18%. 100 hours for a restore? Processing is pretty steady at around 23 MB/s, but this is dumping to an idle local disk array, so I struggle to see why streaming from tape isn't saturating the LTO drive or even getting above roughly 10% of its speed.

Funnily enough, since the restore started I kicked off a daily incremental to tape, and that's rocking along at 150 MB/s without changing the rate of the restore job on the other drive.

So, other tape users: what kind of restore rates are you seeing, and what's your configuration? Is this kind of processing rate typical for you?
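
As a rough sanity check on the figures in this post, the projected total time follows from a linear extrapolation of progress. The sketch below assumes the restore keeps moving at a constant rate, which is an assumption and not something the job guarantees; the function name is hypothetical.

```python
def projected_total_hours(elapsed_hours: float, fraction_done: float) -> float:
    """Linearly extrapolate the total job duration from progress so far."""
    if not 0 < fraction_done <= 1:
        raise ValueError("fraction_done must be in (0, 1]")
    return elapsed_hours / fraction_done


# Figures from the post: 18 hours elapsed, 18% complete.
total_hours = projected_total_hours(18, 0.18)
remaining_hours = total_hours - 18

# Restore rate relative to the write rate seen on the other drive.
restore_vs_write = 23 / 150

print(f"projected total: {total_hours:.0f} h, remaining: {remaining_hours:.0f} h")
print(f"restore runs at {restore_vs_write:.0%} of the concurrent write rate")
```

The same helper works for any job that reports elapsed time and percent complete, as long as the rate is steady.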

-

veremin

- Product Manager

- Posts: 20746

- Liked: 2409 times

- Joined: Oct 26, 2012 3:28 pm

- Full Name: Vladimir Eremin

- Contact:

Re: v9 restore from tape: how fast does it process for you

What component is identified as a major bottleneck? And you're restoring to repository I guess, so what is the connection between tape server and repository?

-

kkuszek

- Enthusiast

- Posts: 92

- Liked: 6 times

- Joined: Mar 13, 2015 3:12 pm

- Full Name: Kurt Kuszek

- Contact:

Re: v9 restore from tape: how fast does it process for you

How do I view that on a restore from tape job? I don't see the traditional statistics and bottleneck display.

-

kkuszek

- Enthusiast

- Posts: 92

- Liked: 6 times

- Joined: Mar 13, 2015 3:12 pm

- Full Name: Kurt Kuszek

- Contact:

Re: v9 restore from tape: how fast does it process for you

P.S.,

I included this in my last ticket.

The tape drive is directly connected to the VMware host running Veeam and passed through to the Veeam VM. This is a dedicated host with a dedicated 6-disk local RAID 10 array for storage.

-

veremin

- Product Manager

- Posts: 20746

- Liked: 2409 times

- Joined: Oct 26, 2012 3:28 pm

- Full Name: Vladimir Eremin

- Contact:

Re: v9 restore from tape: how fast does it process for you

So the virtual machine with the tape drive added as a pass-through device is playing the role of tape server. What about the repository server? Where is it located in this scheme?

-

kkuszek

- Enthusiast

- Posts: 92

- Liked: 6 times

- Joined: Mar 13, 2015 3:12 pm

- Full Name: Kurt Kuszek

- Contact:

Re: v9 restore from tape: how fast does it process for you

Hi v.Eremin,

This is a rather small environment so backup duty is entirely within 1 machine. It's just an essentials plus deployment, nothing huge.

It's a single Dell R710 running VMware, and Veeam is the only VM on it. I actually have the HBA that the tape drive is connected to added as a pass-through device because, strangely enough, VMware does not support multi-LUN targets. The R710 has 6x 3.5in drive slots in the front, which I populated with 4 TB HGST SAS drives in RAID 10.

-

goletsa

- Novice

- Posts: 3

- Liked: 1 time

- Joined: Feb 12, 2016 9:31 am

- Full Name: Alexey Golets

- Contact:

Re: v9 restore from tape: how fast does it process for you

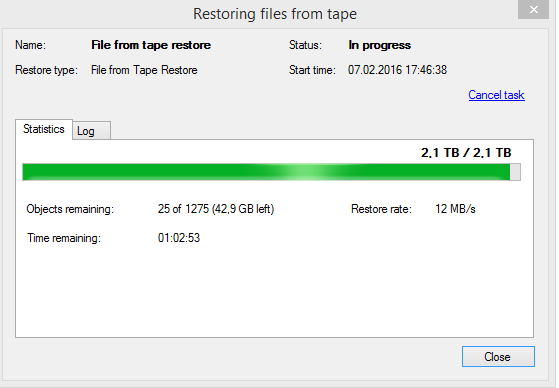

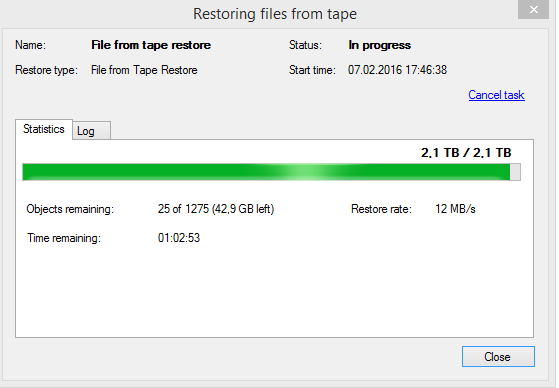

HP LTO4, SAS HBA, on the same machine where Veeam is installed.

Write was about 100 MB/s on Veeam v8.

But the restore rate is only 20 MB/s on v9 (restoring files from tape: about 400 files of 1-3 GB per tape (750 GB), 3 tapes containing 2.1 TB, 3 days to restore).

-

Dima P.

- Product Manager

- Posts: 15025

- Liked: 1881 times

- Joined: Feb 04, 2013 2:07 pm

- Full Name: Dmitry Popov

- Location: Prague

- Contact:

Re: v9 restore from tape: how fast does it process for you

Alexey,

Regarding "Restore files from tape, about 400 files 1-3GB per tape (750GB)":

What is the average size of the file? Can you open a case with support and let me know the case ID, so I can share it with the dev team? Thank you in advance.

-

mongie

- Expert

- Posts: 152

- Liked: 24 times

- Joined: May 16, 2011 4:00 am

- Full Name: Alex Macaronis

- Location: Brisbane, Australia

- Contact:

[MERGED] TL2000 Painfully Slow - v9

Trying to run tape backup jobs on a new V9 upgraded server.

This was slow in v8 and is just as slow in v9. 6 Gb SAS-connected LTO6 drive in a Dell TL2000 library. I get write speeds of less than 20 MB/s, normally around 12 MB/s. Target is the bottleneck... but I don't see why.

I'm wondering if there is anything I can do? Should I log a ticket with Dell or Veeam?

-

veremin

- Product Manager

- Posts: 20746

- Liked: 2409 times

- Joined: Oct 26, 2012 3:28 pm

- Full Name: Vladimir Eremin

- Contact:

Re: TL2000 Painfully Slow - v9

What kind of job are you running: file to tape or backup to tape? What is used as the source for the tape job? Can you provide us with the full bottleneck statistics (the one with the percent distribution)? Thanks.

-

Lulworth

- Novice

- Posts: 3

- Liked: never

- Joined: Jan 27, 2016 2:01 pm

- Full Name: Matthew Bartlett

- Contact:

Re: TL2000 Painfully Slow - v9

I get the same here with an HP 1x8 G2 Autoloader with an LTO-6 drive connected via SAS.

I am running tests at the moment, but I never get above 60 MB/s, which is slow compared to what I used to get with Backup Exec.

An example load for a job is 14/02/2016 13:37:27 :: Load: Source 0% > Proxy 3% > Network 0% > Target 10%
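
For anyone collecting these "Load:" lines to post in the thread, a minimal sketch of pulling the percentages out of one is shown below. The function names are hypothetical, and treating the stage with the highest percentage as the one to look at first is an assumption made for illustration.

```python
import re

# Matches pairs such as "Target 10%" inside a job "Load:" line.
LOAD_PATTERN = re.compile(r"(Source|Proxy|Network|Target)\s+(\d+)%")


def parse_load(line: str) -> dict[str, int]:
    """Extract the per-component percentages from a 'Load:' line."""
    return {name: int(pct) for name, pct in LOAD_PATTERN.findall(line)}


def busiest_component(load: dict[str, int]) -> str:
    """Return the component with the highest reported load."""
    if not load:
        raise ValueError("no load figures found")
    return max(load, key=load.get)


line = "14/02/2016 13:37:27 :: Load: Source 0% > Proxy 3% > Network 0% > Target 10%"
load = parse_load(line)
print(load, "->", busiest_component(load))
```

Note that even the busiest stage here is at only 10%, so the percentages alone do not explain the low rate.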

-

kkuszek

- Enthusiast

- Posts: 92

- Liked: 6 times

- Joined: Mar 13, 2015 3:12 pm

- Full Name: Kurt Kuszek

- Contact:

Re: v9 restore from tape: how fast does it process for you

Dima,

Regarding "What is the average size of the file?":

I believe that is exactly what the OP meant by their post. I interpreted it as each tape containing around 400 files of 1-3 GB each, with around 750 GB used per tape, and the complete restore being 2.1 TB in size.

-

Dima P.

- Product Manager

- Posts: 15025

- Liked: 1881 times

- Joined: Feb 04, 2013 2:07 pm

- Full Name: Dmitry Popov

- Location: Prague

- Contact:

Re: TL2000 Painfully Slow - v9

Hi Matthew,

To clarify, are you backing up files via a File to Tape job or backups via a Backup to Tape job? If it's a File to Tape job, what is the average size of the files (a rough estimate is fine, just for better understanding)? Thanks

-

Lulworth

- Novice

- Posts: 3

- Liked: never

- Joined: Jan 27, 2016 2:01 pm

- Full Name: Matthew Bartlett

- Contact:

Re: TL2000 Painfully Slow - v9

Hi Dima,

I am using File to Tape. I am copying to tape the backups Veeam has written to my storage area. These are the VBK, VIB and VRB files, which vary in size from tens of MB to 600 GB, so there are not a lot of small files.

It's currently taking 25 hours to transfer 2.6TB.

Thanks,

Matt
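
To put the 25 hours for 2.6 TB into the same units as the other reports in this thread, here is a small sketch of the average-rate arithmetic. Whether the console counts in decimal or binary units is not stated in the post, so both are computed; the function name is hypothetical.

```python
def average_rate_mb_per_s(terabytes: float, hours: float, binary: bool = False) -> float:
    """Average throughput for a transfer of `terabytes` taking `hours`.

    binary=False: 1 TB = 10**12 bytes, 1 MB = 10**6 bytes.
    binary=True:  1 TB = 2**40 bytes,  1 MB = 2**20 bytes.
    """
    if hours <= 0:
        raise ValueError("hours must be positive")
    tb_bytes, mb_bytes = (2**40, 2**20) if binary else (10**12, 10**6)
    return terabytes * tb_bytes / (hours * 3600) / mb_bytes


# Figures from the post: 2.6 TB in 25 hours.
rate_decimal = average_rate_mb_per_s(2.6, 25)
rate_binary = average_rate_mb_per_s(2.6, 25, binary=True)

print(f"decimal units: {rate_decimal:.1f} MB/s")
print(f"binary units:  {rate_binary:.1f} MB/s")
```

Either way the average works out to roughly 30 MB/s, about half of the 60 MB/s peak mentioned earlier.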

-

Dima P.

- Product Manager

- Posts: 15025

- Liked: 1881 times

- Joined: Feb 04, 2013 2:07 pm

- Full Name: Dmitry Popov

- Location: Prague

- Contact:

Re: v9 - Tape performance reports

Guys,

I’ve merged both performance report threads into one for tracking purposes. Support and QA teams will review all cases posted above.

To all newcomers: if you see a performance decrease in a file to tape job, backup to tape job, or restore from tape, please open a support case and post the case ID so we can check it. Please provide:

0. Support case ID

1. Background on your infrastructure setup (i.e. type of the library, how it's connected to tape proxy, generation of tape drives)

2. What type of tape job were you using during the performance testing (file to tape / backup to tape / file from tape restore / backup from tape restore)

3. Bottleneck stats from job details

4. Average size of the file and number of backed up/restored files (a rough estimate is good enough)

5. Any other useful information

Thanks.

-

mongie

- Expert

- Posts: 152

- Liked: 24 times

- Joined: May 16, 2011 4:00 am

- Full Name: Alex Macaronis

- Location: Brisbane, Australia

- Contact:

Re: v9 - Tape performance reports

I've logged a ticket now... #01700980

-

larkrist

- Novice

- Posts: 9

- Liked: 3 times

- Joined: Feb 18, 2016 8:22 pm

- Full Name: Lars Kristoffersen

- Contact:

[MERGED] : Tape LTO4 Expected speed?

I wonder if there is something we can do to speed up the Backup to Tape Job.

We have a StoreOnce with Catalyst over FC as the first backup store. We then want to copy this to an LTO4 tape library, also on FC. The proxy has access to both.

We are getting a speed of 50 MB/s.

-

skrause

- Veteran

- Posts: 487

- Liked: 107 times

- Joined: Dec 08, 2014 2:58 pm

- Full Name: Steve Krause

- Contact:

Re: Tape LTO4 Expected speed?

What does the Veeam console say is the bottleneck?

Also, are you using encryption? Software encryption puts a pretty big penalty on tape performance.

Steve Krause

Veeam Certified Architect

-

veremin

- Product Manager

- Posts: 20746

- Liked: 2409 times

- Joined: Oct 26, 2012 3:28 pm

- Full Name: Vladimir Eremin

- Contact:

Re: v9 - Tape performance reports

Hi, Lars,

We try to keep all posts about tape jobs' performance within one thread for the purpose of convenience.

Please, follow the recommendations given by Dmitry.

Thanks.

-

redhorse

- Expert

- Posts: 229

- Liked: 13 times

- Joined: Feb 19, 2013 8:08 am

- Full Name: RH

- Location: Germany

- Contact:

Re: v9 - Tape performance reports

I do have the same situation:

1. Dell TL2000 with LTO5-Tapes, connected to physical Veeam-Server with all roles

2. File-to-Tape

3. Bottleneck detection shows no result

4. About one million files with average file size of 250 KByte

The processing rate is about 16 MB/s, is that normal?

I have to restore all the old file backups from Backup Exec to disk and then back them up to tape with Veeam. Because that takes so much time, my idea is to back up the volume holding the restored files with Endpoint Protection and then write that backup to tape. Does that make sense?

-

veremin

- Product Manager

- Posts: 20746

- Liked: 2409 times

- Joined: Oct 26, 2012 3:28 pm

- Full Name: Vladimir Eremin

- Contact:

Re: v9 - Tape performance reports

And you are already on version 9, right? If so, feel free to open a ticket with our support team and post its number here so that we can follow the case and check whether the performance you see is normal indeed. Thanks.

-

redhorse

- Expert

- Posts: 229

- Liked: 13 times

- Joined: Feb 19, 2013 8:08 am

- Full Name: RH

- Location: Germany

- Contact:

Re: v9 - Tape performance reports

Yes V9, Case #01713666

I have now started a file-level backup with Veeam EP. This is faster, and copying one large file to tape should be much faster.

-

rreed

- Veteran

- Posts: 354

- Liked: 73 times

- Joined: Jun 30, 2015 6:06 pm

- Contact:

Re: v9 - Tape performance reports

#01715660 for us. It's always been the same in v8 and in v9. Pulling across the network yields ~30-70 MB/s to our LTO-6. Pulling from the tape server's local HDD ran its full 120MB/s in v8, and v9 somehow reports 240MB/s from local HDD(?). File or Backup, doesn't matter. (4) x 1Gbps LAG, and I get that it's likely only running one data stream so only one NIC, but 30-70 MB/s doesn't saturate a 1Gbps link. Target is always the bottleneck w/ all jobs (File and Backup); Source 0%, Proxy 5-10%, Network 0%, Target 15% thereabouts across all network tape jobs.
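
The point about not saturating a single 1 Gbps link can be checked with a quick conversion. This is a sketch only: it uses the raw line rate in decimal units and ignores protocol overhead, so real-world capacity is somewhat lower. The function name is hypothetical.

```python
def link_capacity_mb_per_s(gigabits_per_s: float) -> float:
    """Raw line rate in decimal MB/s, ignoring protocol overhead."""
    return gigabits_per_s * 1000 / 8


# One member of the 4 x 1 Gbps LAG, assuming a single data stream.
capacity = link_capacity_mb_per_s(1)

# Observed range for network tape jobs from the post: 30-70 MB/s.
low_share = 30 / capacity
high_share = 70 / capacity

print(f"1 Gbps is about {capacity:.0f} MB/s raw")
print(f"observed rates use {low_share:.0%} to {high_share:.0%} of one link")
```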

VMware 6

Veeam B&R v9

Dell DR4100's

EMC DD2200's

EMC DD620's

Dell TL2000 via PE430 (SAS)

-

rreed

- Veteran

- Posts: 354

- Liked: 73 times

- Joined: Jun 30, 2015 6:06 pm

- Contact:

Re: v9 - Tape performance reports

A question while we're on the topic of v9 tape performance: how is the processing rate calculated in the Statistics dialog box? The reason I ask is that we're running LTO-6, and in v8, when we ran jobs off the tape server's HDD, it would report right around 120 MB/s, which is LTO-6's advertised speed. In v9, doing the same thing reports up to 250+ MB/s on one single tape drive! Do we have a bug there?

VMware 6

Veeam B&R v9

Dell DR4100's

EMC DD2200's

EMC DD620's

Dell TL2000 via PE430 (SAS)

-

Dima P.

- Product Manager

- Posts: 15025

- Liked: 1881 times

- Joined: Feb 04, 2013 2:07 pm

- Full Name: Dmitry Popov

- Location: Prague

- Contact:

Re: v9 - Tape performance reports

Hi rreed,

This might be a bug indeed. I’ve seen several reports where tape average performance was not correctly calculated, so it’s better to report this issue to support team and let them investigate the logs.

-

cerberus

- Expert

- Posts: 172

- Liked: 21 times

- Joined: Aug 28, 2015 2:45 pm

- Full Name: Mirza

- Contact:

[MERGED] Veeam v9 B2T to dual LTO7 drives slow?

Hello,

We have a Dell ML6020 LTO7 tape library (dual LTO7 drives) connected via 8 Gb FC to our dedicated backup server, a new Dell R730xd with local storage (8x4TB 7.2k RPM NL-SAS in RAID 10) and a 1.75 TB SSD write I/O caching layer.

The RAID 10 is formatted with a 512 KB stripe size and the NTFS volume with a 64 KB cluster size. Our tape drives are set to use native SCSI with the largest block size for tape (524288).

We have 2 B2D jobs set up so that we can write to both tape drives at once (I know that in v9 it is possible to use a single job to write to both tapes, but I found the fill rate on tape was not acceptable, so I am using two jobs). The local repository is set up for per-VM backup files.

The B2D jobs finish fast and in acceptable time (backing up VMware over FC using CBT). The B2T job is not so great: a single LTO7 should do 300 MB/s (600 MB/s combined), but I am barely getting throughput close to those numbers. Observing the B2T jobs, I notice a very interesting DROP in performance when both are running. If a single job is running, it gets upwards of 270 MB/s.

This is a reverse incremental job, so the last file is always the full VBK, and since this is all fairly new (only 5 restore points) there shouldn't be much fragmentation causing random reads. I would assume the B2T would be a very linear read operation in this case.

I found KB2014 and ran diskspd tests; the results are below.

- Reverse Incremental (notice the read here, through the roof) http://pastebin.com/1dRHPv7i

- Random Read http://pastebin.com/XHkfXfgM

- Linear Read http://pastebin.com/iKu8jfbH

- HDTune yields about 700MB/s read

Graph of first B2T job... http://imgur.com/VJyUMnc

Graph of 2nd B2T job... http://imgur.com/touOW7P

Both jobs show Target as the bottleneck (37%), and Proxy is also up there at around 35%. Veeam v9 has all roles installed on a single dedicated backup server with local storage, and the tape library is zoned through our Brocade FC switch.

I spoke to Dell as well and they ran IBM's ITDT tool on both drives at same time and they were both getting 280MB/s in parallel.

No idea what to do about the complete performance drop in one of the B2T jobs when both are running in parallel. Any ideas what the issue is or what I could do to yield more out of both of our new LTO7 drives?

Case #01719643

-

alanbolte

- Veteran

- Posts: 635

- Liked: 174 times

- Joined: Jun 18, 2012 8:58 pm

- Full Name: Alan Bolte

- Contact:

[MERGED] Re: Veeam v9 B2T to dual LTO7 drives slow?

What happens if you run two instances of the diskspd linear read test concurrently? Or if you run diskspd reading from one file while the tape job copies a different file to tape?

-

cerberus

- Expert

- Posts: 172

- Liked: 21 times

- Joined: Aug 28, 2015 2:45 pm

- Full Name: Mirza

- Contact:

[MERGED] Re: Veeam v9 B2T to dual LTO7 drives slow?

I ran two instances of diskspd.exe with the -b (block size) option matched to the setting on the backup to disk job, which is set to "Local target" with "Optimal" compression. This means a 1024K block size on disk for Veeam files.

Both instances, running simultaneously, are getting 400+ MB/s.

Command Line: diskspd.exe -b1024K -h -d300 D:\Backups\Nightly Backup job for Servers\xxx-xxx.vm-1952016-03-08T193037.vbk

Total IO

thread | bytes | I/Os | MB/s | I/O per s | file

------------------------------------------------------------------------------

0 | 133787811840 | 127590 | 425.29 | 425.29 | D:\Backups\Nightly Backup job for Servers\xxx-xxx.vm-1952016-03-08T193037.vbk (38GB)

------------------------------------------------------------------------------

total: 133787811840 | 127590 | 425.29 | 425.29

Read IO

thread | bytes | I/Os | MB/s | I/O per s | file

------------------------------------------------------------------------------

0 | 133787811840 | 127590 | 425.29 | 425.29 | D:\Backups\Nightly Backup job for Servers\xxx-xxx.vm-1952016-03-08T193037.vbk (38GB)

------------------------------------------------------------------------------

total: 133787811840 | 127590 | 425.29 | 425.29

Write IO

thread | bytes | I/Os | MB/s | I/O per s | file

------------------------------------------------------------------------------

0 | 0 | 0 | 0.00 | 0.00 | D:\Backups\Nightly Backup job for Servers\xxx-xxx.vm-1952016-03-08T193037.vbk (38GB)

------------------------------------------------------------------------------

total: 0 | 0 | 0.00 | 0.00

Command Line: diskspd.exe -b1024K -h -d300 D:\Backups\Nightly Backup job for Applications\xxx-xxx.vm-2002016-03-08T190028.vbk

Total IO

thread | bytes | I/Os | MB/s | I/O per s | file

------------------------------------------------------------------------------

0 | 137800712192 | 131417 | 438.05 | 438.05 | D:\Backups\Nightly Backup job for Applications\xxx-xxx.vm-2002016-03-08T190028.vbk (90GB)

------------------------------------------------------------------------------

total: 137800712192 | 131417 | 438.05 | 438.05

Read IO

thread | bytes | I/Os | MB/s | I/O per s | file

------------------------------------------------------------------------------

0 | 137800712192 | 131417 | 438.05 | 438.05 | D:\Backups\Nightly Backup job for Applications\xxx-xxx.vm-2002016-03-08T190028.vbk (90GB)

------------------------------------------------------------------------------

total: 137800712192 | 131417 | 438.05 | 438.05

Write IO

thread | bytes | I/Os | MB/s | I/O per s | file

------------------------------------------------------------------------------

0 | 0 | 0 | 0.00 | 0.00 | D:\Backups\Nightly Backup job for Applications\xxx-xxx.vm-2002016-03-08T190028.vbk (90GB)

------------------------------------------------------------------------------

total: 0 | 0 | 0.00 | 0.00
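
A quick cross-check of the two diskspd runs above, as a sketch rather than a definitive analysis. The byte counts, I/O counts and MB/s values are copied from the output; the 300 MB/s per-drive target is the figure quoted earlier in this thread. It assumes diskspd's "MB/s" column is in units of 2**20 bytes, and the comparison against the drive figure ignores any unit difference.

```python
BLOCK_SIZE = 1024 * 1024   # bytes per I/O, from -b1024K
DURATION_S = 300           # nominal run length, from -d300
LTO7_TARGET_MB_S = 300     # per-drive figure quoted earlier in the thread

# Values copied from the "Read IO" totals of the two runs.
runs = {
    "Servers": {"bytes": 133787811840, "ios": 127590, "mb_per_s": 425.29},
    "Applications": {"bytes": 137800712192, "ios": 131417, "mb_per_s": 438.05},
}

for name, run in runs.items():
    # Each I/O moved exactly one block, so bytes must equal ios * block size.
    assert run["bytes"] == run["ios"] * BLOCK_SIZE
    computed = run["bytes"] / DURATION_S / 2**20
    print(f"{name}: computed {computed:.2f} MB/s, reported {run['mb_per_s']:.2f} MB/s")

combined = sum(run["mb_per_s"] for run in runs.values())
needed = 2 * LTO7_TARGET_MB_S
print(f"combined read: {combined:.2f} MB/s vs {needed} MB/s needed ({combined / needed:.0%})")
```

Under these test conditions the repository delivers more concurrent sequential read throughput than two drives at the quoted rate would need, which suggests (but does not prove) that raw disk read speed is not what limits the parallel B2T jobs.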

-

msmith_uk

- Lurker

- Posts: 2

- Liked: never

- Joined: Apr 01, 2016 3:31 pm

- Full Name: matt smith

- Contact:

[MERGED] Veeam Slow backup to tape

Hi all,

I'm having an issue with my new Veeam setup. So far support has not been able to resolve it, so I hoped someone may have seen it or have an idea.

Basically, when backing up to tape we are getting speeds of 14 MB/s with Veeam; by comparison, with Backup Exec we were getting around 50 MB/s.

Our setup is as follows: Veeam backs up to disk on the SAN from a physical server, and a Dell TL2000 attached to that physical server via iSCSI backs up the 'to disk' jobs to tape.

The only thing I have noticed is that it feels as though it has capped itself at 14 MB/s, though there are no options that indicate so.

Any pointers would be greatly appreciated.

Thanks

-

Dima P.

- Product Manager

- Posts: 15025

- Liked: 1881 times

- Joined: Feb 04, 2013 2:07 pm

- Full Name: Dmitry Popov

- Location: Prague

- Contact:
