-

dkvello

- Service Provider

- Posts: 108

- Liked: 14 times

- Joined: Jan 01, 2006 1:01 am

- Full Name: Dag Kvello

- Location: Oslo, Norway

- Contact:

ReFS 3.1 goodies and Scale Out Repositories

So, how does this work when you use scale-out repos?

Particularly together with the Performance Policy option.

-

dellock6

- Veeam Software

- Posts: 6216

- Liked: 1999 times

- Joined: Jul 26, 2009 3:39 pm

- Full Name: Luca Dell'Oca

- Location: Varese, Italy

- Contact:

Re: ReFS 3.1 goodies and Scale Out Repositories

I suggest sticking to data locality mode; otherwise you may lose the benefits of block cloning for synthetic fulls, as blocks still have to be physically moved from one extent to another. Think of SOBR + ReFS exactly like grouping multiple dedup appliances such as Data Domain or StoreOnce in a SOBR: it can be done, but the benefits of block cloning are there only if the entire chain is in the same repository.

Other than that, SOBR with multiple ReFS extents is a great design, probably second only to the use of Storage Spaces Direct.

Luca Dell'Oca

Principal EMEA Cloud Architect @ Veeam Software

@dellock6

https://www.virtualtothecore.com/

vExpert 2011 -> 2022

Veeam VMCE #1

-

dkvello

- Service Provider

- Posts: 108

- Liked: 14 times

- Joined: Jan 01, 2006 1:01 am

- Full Name: Dag Kvello

- Location: Oslo, Norway

- Contact:

Re: ReFS 3.1 goodies and Scale Out Repositories

I had my suspicions

So, S2D with ReFS 3.1 is preferable to scale-out repos in 9.5?

-

tsightler

- VP, Product Management

- Posts: 6053

- Liked: 2874 times

- Joined: Jun 05, 2009 12:57 pm

- Full Name: Tom Sightler

- Contact:

Re: ReFS 3.1 goodies and Scale Out Repositories

Simple answer: it doesn't!

More complex answer: obviously, to be able to use block clone, the blocks have to be on the same ReFS volume. Since SOBR represents extents of different storage, there's no real way to take advantage of ReFS enhanced synthetics with this configuration. To use ReFS + SOBR it's highly recommended to use the data locality policy; however, even in this case there are a few things to look out for. Specifically, if any extent fills up and backups are redirected to other nodes, you will lose the enhanced capabilities for merge and/or GFS. Also, if you evacuate nodes, block clone is not preserved, so if you have multiple block-cloned synthetic fulls in a chain, they will require space for each full in the target.
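To make the space impact of evacuation concrete, here is a rough back-of-the-envelope sketch in Python. It is not Veeam's sizing logic: the function name `chain_footprint_tb` and the sample numbers are made up for illustration, and it assumes a block-cloned synthetic full consumes no extra space (metadata and newly changed blocks are ignored).

```python
def chain_footprint_tb(full_tb, incr_tb, fulls, incrs, block_clone):
    """Rough on-disk size of one backup chain, in TB.

    Assumption (simplified): with block clone, every synthetic full after
    the first only references blocks that already exist on the volume, so
    it adds nothing. Without block clone each full is stored separately.
    """
    if fulls < 1:
        raise ValueError("a chain needs at least one full backup")
    physical_fulls = 1 if block_clone else fulls
    return physical_fulls * full_tb + incrs * incr_tb


# Hypothetical chain: 5 TB fulls, 0.25 TB incrementals, 4 fulls, 24 incrementals.
on_one_refs_volume = chain_footprint_tb(5.0, 0.25, fulls=4, incrs=24, block_clone=True)
after_evacuation = chain_footprint_tb(5.0, 0.25, fulls=4, incrs=24, block_clone=False)
print(on_one_refs_volume, after_evacuation)  # 11.0 26.0
```

Under these assumptions the same chain needs more than twice the space once block clone is no longer preserved, which is why the target of an evacuation has to be sized for every full.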

-

tsightler

- VP, Product Management

- Posts: 6053

- Liked: 2874 times

- Joined: Jun 05, 2009 12:57 pm

- Full Name: Tom Sightler

- Contact:

Re: ReFS 3.1 goodies and Scale Out Repositories

dkvello wrote: So, S2D with ReFS 3.1 is preferable to scale-out repos in 9.5?

Just remember that it has its own limitations: nodes have to be very similar, and all backup data within a single share has to traverse in/out via the owner node. So you can't fully exploit the bandwidth of all nodes without also creating shares spread across multiple nodes, but then you'd still need separate repos (potentially aggregated with SOBR) for these shares, and block clone won't work across these separate extents either.

-

dkvello

- Service Provider

- Posts: 108

- Liked: 14 times

- Joined: Jan 01, 2006 1:01 am

- Full Name: Dag Kvello

- Location: Oslo, Norway

- Contact:

Re: ReFS 3.1 goodies and Scale Out Repositories

Yeah, so it's a bit of back to square one again. To get scalability (both size and parallel I/O) AND all ReFS goods, I have to move away from both scale-out repos (both Locality and Performance policies) and S2D and start "partitioning" my backup jobs again: these jobs to that single-volume repository, and so forth.

I consider the "one node does all the writing" topology of S2D a major design flaw of a scale-out storage system.

-

Gostev

- former Chief Product Officer (until 2026)

- Posts: 33084

- Liked: 8179 times

- Joined: Jan 01, 2006 1:01 am

- Location: Baar, Switzerland

- Contact:

Re: ReFS 3.1 goodies and Scale Out Repositories

dkvello wrote: To get scalability (both size and parallel I/O) AND all ReFS goods I have to move away from both scale-out repos

Why move away from SOBR? With per-VM chains and Data Locality policy you get both scalability AND ReFS goods.

-

dkvello

- Service Provider

- Posts: 108

- Liked: 14 times

- Joined: Jan 01, 2006 1:01 am

- Full Name: Dag Kvello

- Location: Oslo, Norway

- Contact:

Re: ReFS 3.1 goodies and Scale Out Repositories

Well, if I wanted to use S2D I would have to.

I'll drop S2D, switch my Performance SOBR to Locality SOBR and use ReFS 3.1. I already use per-VM chains.

-

dellock6

- Veeam Software

- Posts: 6216

- Liked: 1999 times

- Joined: Jul 26, 2009 3:39 pm

- Full Name: Luca Dell'Oca

- Location: Varese, Italy

- Contact:

Re: ReFS 3.1 goodies and Scale Out Repositories

There is still a design case for S2D and SOBR together. Let me explain quickly: with S2D, even if you have a scale-out cluster, one volume is owned at any given point by only one of the nodes, so the CPU and memory used to run that volume are comparable to a single Storage Spaces server, with "just" more disk space in the backend coming from the other nodes. A suggestion for the design is to create at a minimum the same number of volumes as the number of nodes you have. This is because S2D will automatically spread the ownership of the volumes across every possible node. So say you have 4 nodes and 4 volumes: each node will own a volume at any given point in time. In this way you will be able to leverage the compute power of the entire cluster.

But then you have again 4 different volumes, and here is where SOBR comes into play to aggregate those volumes into the same entity.

Luca
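The volumes-per-node suggestion can be illustrated with a toy model. This is only a sketch under a strong assumption: ownership is spread perfectly evenly (volume i goes to node i modulo the node count). The real cluster balances ownership on its own terms, and the names `spread_ownership` and `active_nodes` are hypothetical.

```python
def spread_ownership(nodes, volumes):
    """Idealized even spread: volume i is owned by node i mod len(nodes)."""
    if not nodes:
        raise ValueError("at least one node is required")
    return {volume: nodes[i % len(nodes)] for i, volume in enumerate(volumes)}


def active_nodes(ownership):
    """Nodes that own at least one volume, i.e. nodes doing ingest work."""
    return sorted(set(ownership.values()))


nodes = ["node1", "node2", "node3", "node4"]

# One big volume: a single owner node handles all the I/O.
print(active_nodes(spread_ownership(nodes, ["vol1"])))
# ['node1']

# One volume per node: every node owns a volume and contributes compute.
print(active_nodes(spread_ownership(nodes, ["vol1", "vol2", "vol3", "vol4"])))
# ['node1', 'node2', 'node3', 'node4']
```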

Luca Dell'Oca

Principal EMEA Cloud Architect @ Veeam Software

@dellock6

https://www.virtualtothecore.com/

vExpert 2011 -> 2022

Veeam VMCE #1

-

tsightler

- VP, Product Management

- Posts: 6053

- Liked: 2874 times

- Joined: Jun 05, 2009 12:57 pm

- Full Name: Tom Sightler

- Contact:

Re: ReFS 3.1 goodies and Scale Out Repositories

I have exactly this setup in my lab, but I'd say it sounds better in theory than practice. The problem with this model is that you can't really aggregate the space, you still end up with 4 different volumes, and if SOBR fills up one of those volumes and spills over to another, the benefits of ReFS block clone are lost and then you risk even more space when the next synthetic operation occurs. Effectively, you lose the ability to leverage block clone across the entirety of the storage, and instead can only use it within sub-pools of that storage, and you risk possible cascading effects if any one extent overruns. At that point it's difficult to understand how S2D + SOBR is better than just standalone servers + SOBR, other than some obvious availability advantages if you were to lose a node.

-

dellock6

- Veeam Software

- Posts: 6216

- Liked: 1999 times

- Joined: Jul 26, 2009 3:39 pm

- Full Name: Luca Dell'Oca

- Location: Varese, Italy

- Contact:

Re: ReFS 3.1 goodies and Scale Out Repositories

Indeed the choice is a bit hard to make. I see two advantages in this design though:

- being able to expand a volume via scaling out and still keep the single namespace, but with SOBR and per-VM chains this is becoming less of a problem

- redundancy, as I have multiple copies of the blocks spread over the S2D cluster, while SOBR is more a federation of single storage systems, where at any point in time I only have one copy of the data. But again, Storage Spaces with mirror or parity and integrity streams makes this less of a problem.

For now I'm suggesting multiple single Storage Spaces machines grouped together with SOBR, also because this latter design only requires Windows Server 2016 Standard edition, while S2D needs the Datacenter edition. It's not a HUGE difference, but it's still a difference that may impact some customer designs.

Luca Dell'Oca

Principal EMEA Cloud Architect @ Veeam Software

@dellock6

https://www.virtualtothecore.com/

vExpert 2011 -> 2022

Veeam VMCE #1

-

tsightler

- VP, Product Management

- Posts: 6053

- Liked: 2874 times

- Joined: Jun 05, 2009 12:57 pm

- Full Name: Tom Sightler

- Contact:

Re: ReFS 3.1 goodies and Scale Out Repositories

Sure, I agree on the redundancy/availability, but don't follow regarding a single namespace as what we are specifically talking about is that you need a volume for every node so expanding via scale out requires adding a volume, and thus adding a new namespace, not expanding an existing one, unless you decide you don't care about using the new node for ingest.

I see potential in the design, but it's just not quite ideal. If Veeam could run the repo agents on the nodes directly, and target the local CSV on each node, now then we'd have something! I actually tried to do this, but Veeam is confused by the CSV and thinks it has no space, and even so, without some enhancements it wouldn't know it was the same ReFS volume and could leverage block clone.

-

DaStivi

- Veeam Legend

- Posts: 453

- Liked: 86 times

- Joined: Jun 30, 2015 9:13 am

- Full Name: Stephan Lang

- Location: Austria

- Contact:

Re: ReFS 3.1 goodies and Scale Out Repositories

Hi, how does ReFS block cloning behave when using dynamic disks in Windows and extending them with new ReFS volumes?

When extending a SOBR with a new ReFS volume and choosing "Data locality", will the next backup then be a full once the space on the first volume is used up?

-

Gostev

- former Chief Product Officer (until 2026)

- Posts: 33084

- Liked: 8179 times

- Joined: Jan 01, 2006 1:01 am

- Location: Baar, Switzerland

- Contact:

Re: ReFS 3.1 goodies and Scale Out Repositories

All ReFS goodies are within a single ReFS volume only.
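As a quick sanity check of the "single volume" precondition, one can compare the device identifiers that the operating system reports for two backup files. The sketch below uses Python's standard `os.stat` and its `st_dev` field; the helper name `on_same_volume` and the file names are made up. It only tests a necessary condition: it does not verify that the filesystem is ReFS, and it is not how Veeam itself decides whether fast clone can be used.

```python
import os
import tempfile


def on_same_volume(path_a, path_b):
    """True if both existing paths report the same device identifier."""
    return os.stat(path_a).st_dev == os.stat(path_b).st_dev


# Demo with two placeholder files in one temporary directory.
with tempfile.TemporaryDirectory() as repo:
    full = os.path.join(repo, "full.vbk")
    incr = os.path.join(repo, "incr.vib")
    for path in (full, incr):
        open(path, "wb").close()
    print(on_same_volume(full, incr))  # True
```

Two files on different SOBR extents would report different identifiers whenever the extents are separate volumes, which matches the rule stated above.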

-

DaStivi

- Veeam Legend

- Posts: 453

- Liked: 86 times

- Joined: Jun 30, 2015 9:13 am

- Full Name: Stephan Lang

- Location: Austria

- Contact:

Re: ReFS 3.1 goodies and Scale Out Repositories

OK, thanks... I thought so...

And what about SOBR and the policies: how does Veeam behave when one extent is running out of disk space? Does Veeam do a new full on the next extent and then use the ReFS goodies again on the next incremental backup?

-

DaStivi

- Veeam Legend

- Posts: 453

- Liked: 86 times

- Joined: Jun 30, 2015 9:13 am

- Full Name: Stephan Lang

- Location: Austria

- Contact:

Re: ReFS 3.1 goodies and Scale Out Repositories

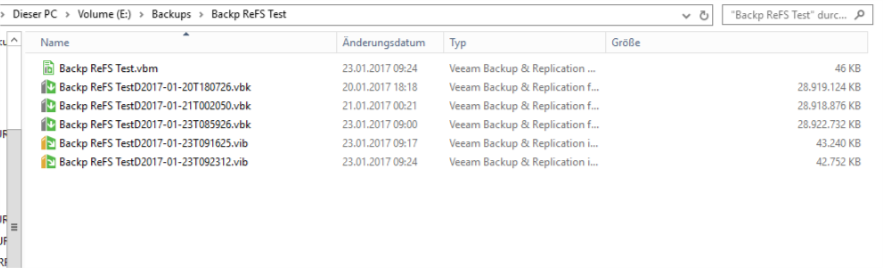

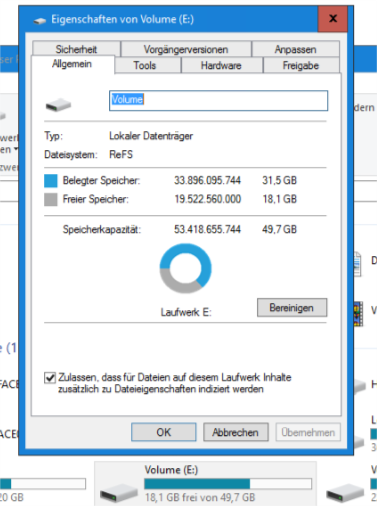

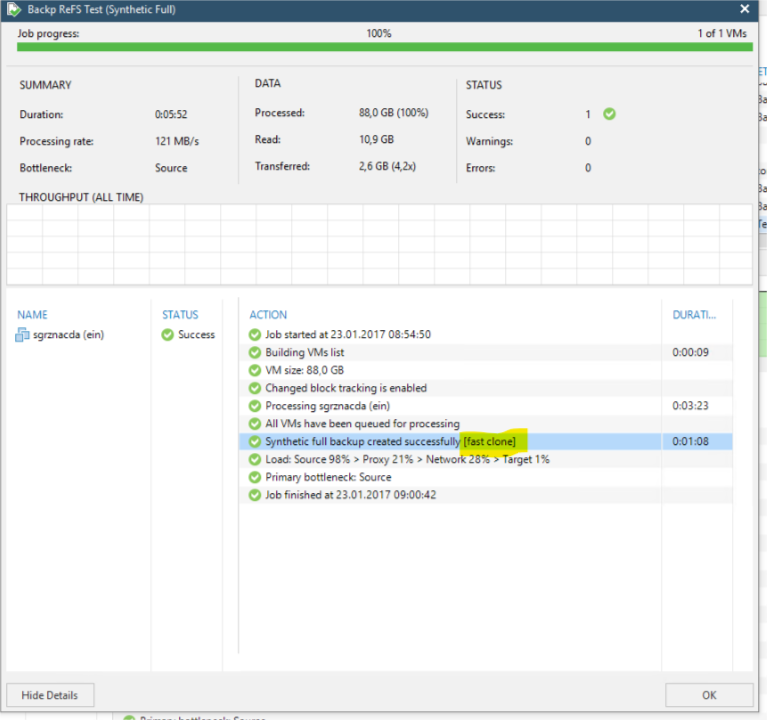

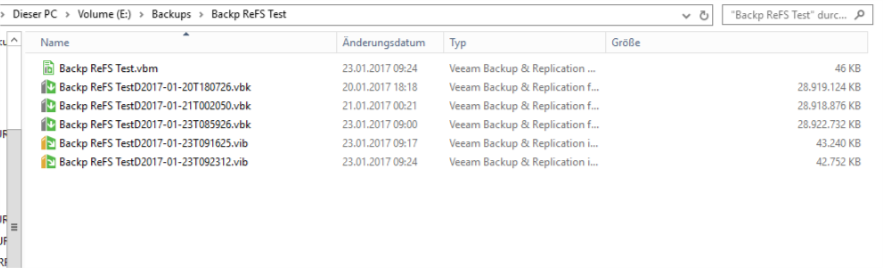

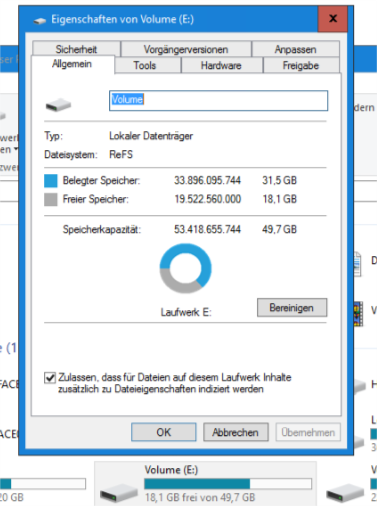

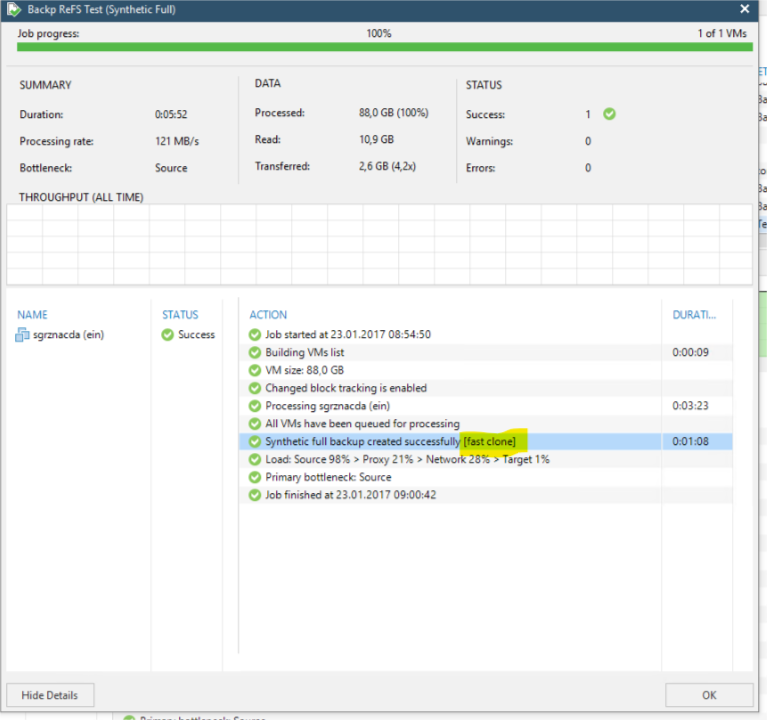

My first tests with Windows dynamic spanned disks look very promising. I've done a couple of job runs now and have had 2 synthetic fulls. Veeam showed them as fast clone, and I can see 3 "full" VBKs that together exceed the total amount of space available!

-

dellock6

- Veeam Software

- Posts: 6216

- Liked: 1999 times

- Joined: Jul 26, 2009 3:39 pm

- Full Name: Luca Dell'Oca

- Location: Varese, Italy

- Contact:

Re: ReFS 3.1 goodies and Scale Out Repositories

Spanned volumes should indeed be completely transparent to ReFS. I've not heard about any limit or warning from Microsoft, so it should be supported. But maybe some Microsoft guys can comment on it better.

Luca Dell'Oca

Principal EMEA Cloud Architect @ Veeam Software

@dellock6

https://www.virtualtothecore.com/

vExpert 2011 -> 2022

Veeam VMCE #1

-

foggy

- Veeam Software

- Posts: 21208

- Liked: 2179 times

- Joined: Jul 11, 2011 10:22 am

- Full Name: Alexander Fogelson

- Contact:

Re: ReFS 3.1 goodies and Scale Out Repositories

DaStivi wrote: and what about SOBR and the policies: how does Veeam behave when one extent is running out of disk space? Does Veeam do a new full on the next extent and then use the ReFS goodies again on the next incremental backup?

SOBR is also transparent to ReFS in this sense. The only limitation is that you need to perform a full after evacuation to a ReFS volume to start getting ReFS benefits (this will be addressed in the future).

-

DaStivi

- Veeam Legend

- Posts: 453

- Liked: 86 times

- Joined: Jun 30, 2015 9:13 am

- Full Name: Stephan Lang

- Location: Austria

- Contact:

Re: ReFS 3.1 goodies and Scale Out Repositories

Gostev wrote on the 1st page that fast clone is not used across SOBR extents... and I would totally understand that, as the block clone API is a ReFS filesystem feature and the ReFS filesystem does not know about the extents in Veeam. With Windows dynamic disks, on the other hand, ReFS does know about all the blocks, as it's basically one filesystem that can manage every block on whatever volume it's expanded to...

The question initially was how ReFS block clone (fast clone) is used with SOBR and either the "data locality" or the "performance" policy. When using data locality, everything is written to the first extent and synthetic fulls use fast clone, but what happens when the next extent is added/used? How does a synthetic full work then? Still using fast clone? (I guess not, and that's also what's written on the first page.) So that means a "non-fastclone" synfull.vbk is written to the new extent, and after that the next synthetic full would be fast clone again... That's exactly what users/admins don't want...

In my example I present NetApp LUNs to my Veeam server, and the max LUN size is 16 TB, so I need a way to extend the ReFS volume without losing space to duplicated fulls, as each of these fulls takes 5 TB again! I would instantly lose about 1/3 of the size of the new store!
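The "1/3 of the new store" estimate follows directly from the two figures in the post (16 TB LUN, 5 TB per full). A minimal check in Python, where the function name `share_lost_to_new_full` is just an illustrative label:

```python
def share_lost_to_new_full(lun_tb, full_tb):
    """Fraction of a fresh extent consumed by one physically written full."""
    if lun_tb <= 0:
        raise ValueError("LUN size must be positive")
    return full_tb / lun_tb


lun_tb, full_tb = 16.0, 5.0  # figures taken from the post above
share = share_lost_to_new_full(lun_tb, full_tb)
print(f"{share:.2%} of the new LUN is used, {lun_tb - full_tb:.0f} TB remain")
# 31.25% of the new LUN is used, 11 TB remain
```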

-

foggy

- Veeam Software

- Posts: 21208

- Liked: 2179 times

- Joined: Jul 11, 2011 10:22 am

- Full Name: Alexander Fogelson

- Contact:

Re: ReFS 3.1 goodies and Scale Out Repositories

I didn't say it works between the extents, just confirmed your statement that it works within a single volume only. So the below quote is true:

DaStivi wrote: so that means a "non-fastclone" synfull.vbk is written to the new extent and after that the next synfull would be fastclone again...
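The behaviour confirmed here can be written down as a small model. This is a sketch of the rule as described in this thread, not of Veeam's actual placement engine: a full is fast-cloned (no data physically written) only when the previous full of the chain sits on the same extent. The function name `physical_writes_tb` and the extent names are hypothetical, and changed blocks and metadata are ignored.

```python
def physical_writes_tb(full_tb, placements):
    """Data physically written for each full in a chain, in TB.

    `placements` lists the extent each full lands on, in chain order.
    The first full, and any full that lands on a different extent than
    its predecessor, is written out completely; the others are treated
    as block-cloned and cost 0 in this simplified model.
    """
    written = []
    previous_extent = None
    for extent in placements:
        written.append(0.0 if extent == previous_extent else full_tb)
        previous_extent = extent
    return written


# A 5 TB chain that spills from extent1 to extent2 after two fulls.
print(physical_writes_tb(5.0, ["extent1", "extent1", "extent2", "extent2"]))
# [5.0, 0.0, 5.0, 0.0]
```

The third entry is the "non-fastclone" full on the new extent; the fourth shows fast clone resuming once the chain has a full on that extent.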
