-

albertwt

- Veteran

- Posts: 966

- Liked: 55 times

- Joined: Nov 05, 2009 12:24 pm

- Location: Sydney, NSW

Backup mode and setting best practice for large File server?

Hi All,

Can anyone here please share some backup configuration tips for large file server VMs?

I have several large VMs running as file servers (larger than 3 TB each) that I want to back up efficiently with Veeam 9. The specs are as follows:

Windows Server 2012 R2 - Data Deduplication enabled

Windows Server 2008 R2

VMware vSphere 5.1 U3

There is no need to write to tape, so I'm wondering which new settings in VBR 9.0 U1 I can use apart from BitLooker, which I already know how to enable safely.

I have also installed a new disk enclosure but haven't configured the LUN yet, because I'm wondering what the best-practice RAID stripe size and NTFS allocation unit size are.

Thanks,

--

/* Veeam software enthusiast user & supporter ! */

-

DaveWatkins

- Veteran

- Posts: 370

- Liked: 97 times

- Joined: Dec 13, 2015 11:33 pm

Re: Backup mode and setting best practice for large File ser

Personally I'd use forever forward incremental unless you have _really_ fast disks. Running the maintenance task to defragment and compact a reverse incremental backup of that size would take a long time. Other than that, I'm not sure any of the new features would really help, apart from per-VM backup chains on the repository to keep file sizes manageable.

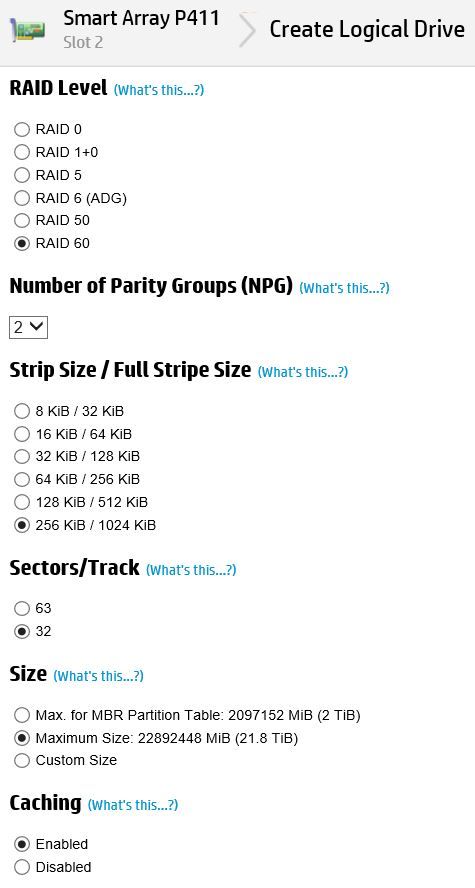

Use a stripe size of 256 KB (assuming the Storage Optimization setting is LAN target) and an allocation unit size of 64 KB. Format the volume with /L in case you want to use deduplication later when Windows Server 2016 comes out; 2012 R2 likely wouldn't cope well with such large files. I don't know how big your repository is, but if you think you'll want to enable dedup on 2016, keep the LUNs smaller than 64 TB.
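The reasoning behind pairing a 256 KB stripe with LAN target can be sketched as a rough rule of thumb. This assumes the source block sizes Veeam documents for these Storage Optimization options (1024 KB local, 512 KB LAN, 256 KB WAN) and a typical ~2:1 compression ratio; the function name and the ratio are illustrative assumptions, not a Veeam tool.

```python
# Rule of thumb (assumption, not a Veeam tool): the average block written to
# the repository is roughly the source block size divided by the compression
# ratio, so a RAID stripe near that size suits the write pattern.

# Source block sizes per Storage Optimization option, in KB (assumed from
# Veeam documentation for the versions discussed in this thread).
BLOCK_SIZE_KB = {
    "Local target": 1024,
    "LAN target": 512,
    "WAN target": 256,
}


def suggested_stripe_kb(setting: str, compression_ratio: float = 2.0,
                        cluster_kb: int = 64) -> int:
    """Largest power-of-two stripe (KB) not exceeding the average compressed
    block, but never smaller than the NTFS allocation unit."""
    avg_block_kb = max(1, int(BLOCK_SIZE_KB[setting] / compression_ratio))
    power_of_two = 1 << (avg_block_kb.bit_length() - 1)
    return max(cluster_kb, power_of_two)


for name in BLOCK_SIZE_KB:
    print(f"{name}: ~{suggested_stripe_kb(name)} KB stripe")
```

Real compression ratios vary with the data, so treat the result as a starting point rather than a hard rule.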

-

albertwt

- Veteran

- Posts: 966

- Liked: 55 times

- Joined: Nov 05, 2009 12:24 pm

- Location: Sydney, NSW

Re: Backup mode and setting best practice for large File ser

Thanks Dave,

In my case the LUN is just 32 TB. So yes, based on your suggestion I'll set the RAID stripe size to 256 KB and the NTFS allocation unit size to 64 KB.
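A 64 KB allocation unit also matters for capacity on a 32 TB LUN. A minimal sketch, assuming the Windows NTFS limit of 2^32 − 1 clusters per volume; the helper names are illustrative:

```python
# NTFS volume-size ceiling for a given allocation unit (cluster) size.
# Assumption: Windows NTFS addresses at most 2**32 - 1 clusters per volume.

KB = 1024
TB = 1024 ** 4
MAX_CLUSTERS = 2**32 - 1


def max_ntfs_volume_bytes(cluster_kb: int) -> int:
    return MAX_CLUSTERS * cluster_kb * KB


def cluster_ok(lun_tb: float, cluster_kb: int) -> bool:
    """True if a LUN of lun_tb can be formatted with this cluster size."""
    return lun_tb * TB <= max_ntfs_volume_bytes(cluster_kb)


lun_tb = 32  # LUN size from the post
for cluster_kb in (4, 8, 16, 32, 64):
    limit_tb = max_ntfs_volume_bytes(cluster_kb) / TB
    print(f"{cluster_kb:2d} KB clusters: max ~{limit_tb:.0f} TB, "
          f"{lun_tb} TB LUN OK: {cluster_ok(lun_tb, cluster_kb)}")
```

With the default 4 KB clusters the ceiling is about 16 TB, so a 32 TB volume needs at least 16 KB clusters; 64 KB leaves plenty of headroom.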

My backup server runs Windows Server 2012 R2; the Data Deduplication role is enabled just to allow file-level restore.

The VM backup jobs were created back in version 6.5, so they're fairly old.

Each backup job contains only one VM, so is it beneficial to enable per-VM backup chains?

--

/* Veeam software enthusiast user & supporter ! */

-

DaveWatkins

- Veteran

- Posts: 370

- Liked: 97 times

- Joined: Dec 13, 2015 11:33 pm

Re: Backup mode and setting best practice for large File ser

Per-VM backup chains is a repository setting, so it applies to all backups stored on that repo.
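To see what the repository setting changes, here is a hypothetical illustration: without per-VM chains each job writes one backup file holding all of its VMs; with it, each VM gets its own chain. Job names and sizes below are made up.

```python
# Hypothetical illustration: full-backup file sizes (TB) on a repository
# with and without per-VM backup chains. Job names and sizes are made up.


def backup_file_sizes(jobs: dict[str, list[float]], per_vm: bool) -> list[float]:
    """jobs maps a job name to the full-backup size (TB) of each VM in it."""
    if per_vm:
        # One chain (and one full backup file) per VM.
        sizes = [vm for vms in jobs.values() for vm in vms]
    else:
        # One chain per job, holding all of its VMs together.
        sizes = [sum(vms) for vms in jobs.values()]
    return sorted(sizes, reverse=True)


jobs = {"FS01": [3.5], "FS02": [4.0], "Misc": [0.5, 0.75, 1.25]}
print("per-job files:", backup_file_sizes(jobs, per_vm=False))
print("per-VM files: ", backup_file_sizes(jobs, per_vm=True))
```

In this model a job containing a single VM produces the same file size either way; only multi-VM jobs are split.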

I'd be inclined to create a new job and copy all of its default settings into your old job, unless there is a reason not to. That way you'll pick up any new settings that will help.

-

albertwt

- Veteran

- Posts: 966

- Liked: 55 times

- Joined: Nov 05, 2009 12:24 pm

- Location: Sydney, NSW

Re: Backup mode and setting best practice for large File ser

Dave,

> DaveWatkins wrote: Per-VM chains is a repository setting, so it applies to all backups stored on the repo.
>
> I'd be inclined to create a new job and copy all the default settings into your old job unless there is a reason not to. That way you'll pick up any new settings that will help.

I've double-checked all of the VM backup jobs, and they all use reverse incremental.

The disks are in a direct-attached storage (DAS) HP Storage D2700 Disk Enclosure (AJ941A): 7200 rpm SATA drives connected to the server via SAS. Would that be categorized as fast?

--

/* Veeam software enthusiast user & supporter ! */

-

DaveWatkins

- Veteran

- Posts: 370

- Liked: 97 times

- Joined: Dec 13, 2015 11:33 pm

Re: Backup mode and setting best practice for large File ser

That's about as slow as it gets. I assume you're also running RAID 5 or RAID 6 (slowing them down even more). I wouldn't be surprised if the maintenance defrag task took more than 24 hours on a backup that large. Obviously you won't know until you try, but that's the primary reason all my really big VMs still use forever forward incremental rather than reverse incremental.
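A back-of-envelope estimate shows why 24+ hours is plausible. This sketch assumes the defragment-and-compact task reads the whole full backup file and writes a new copy (so I/O is roughly twice the file size); the sustained throughput figures are guesses, and mixed read/write on RAID 5/6 with 7200 rpm SATA drives may well land at the low end.

```python
# Back-of-envelope: duration of a "defragment and compact" pass.
# Assumptions: the task reads the full backup file and writes a new copy
# (about 2x the file size in I/O); throughput values are illustrative guesses.


def compact_hours(full_backup_tb: float, throughput_mb_s: float) -> float:
    total_mb = 2 * full_backup_tb * 1024 * 1024  # read + write, in MB
    return total_mb / throughput_mb_s / 3600


for mb_s in (50, 100, 200):
    print(f"3 TB full at {mb_s} MB/s: ~{compact_hours(3, mb_s):.1f} h")
```

At an assumed 50 MB/s a 3 TB full takes roughly 35 hours; even at 100 MB/s it is about 17.5 hours.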

-

albertwt

- Veteran

- Posts: 966

- Liked: 55 times

- Joined: Nov 05, 2009 12:24 pm

- Location: Sydney, NSW

Re: Backup mode and setting best practice for large File ser

> DaveWatkins wrote: That's about as slow as it gets. I assume you're also running RAID 5 or RAID 6 (slowing them down even more). I wouldn't be surprised if the maintenance defrag task took more than 24 hours on a backup that large. Obviously you won't know until you try, but that's the primary reason all my really big VMs still use forever forward incremental rather than reverse incremental.

Anyway, I will convert all of the backup jobs to forward incremental by editing them one by one and letting each run on its own schedule. Hopefully I won't need to start a manual full backup.

Many thanks, Dave, for sharing and for your help.

--

/* Veeam software enthusiast user & supporter ! */

-

DaveWatkins

- Veteran

- Posts: 370

- Liked: 97 times

- Joined: Dec 13, 2015 11:33 pm

Re: Backup mode and setting best practice for large File ser

It'll only be a problem for large VMs; any small ones can stay on reverse incremental.

You will have to run an active full to switch them over, so it might be worth first seeing how long the defrag maintenance task actually takes. By default it only runs once a month, so if you schedule it for a weekend, missing one weekend backup a month while it runs might not be an issue.
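To check whether a monthly maintenance run fits in a weekend, a small calendar sketch can help. The schedule here is hypothetical (start on the last Saturday of the month at 20:00, finish by Monday 06:00, a 34-hour window); it is not Veeam's default and the function names are illustrative.

```python
from datetime import date, datetime, time, timedelta
import calendar

SATURDAY = 5  # date.weekday(): Monday=0 ... Sunday=6


def last_saturday(year: int, month: int) -> date:
    """Date of the last Saturday in the given month."""
    last_day = date(year, month, calendar.monthrange(year, month)[1])
    return last_day - timedelta(days=(last_day.weekday() - SATURDAY) % 7)


def fits_weekend(year: int, month: int, hours: float,
                 start: time = time(20, 0), deadline: time = time(6, 0)) -> bool:
    """Hypothetical window: last Saturday at `start` until Monday at `deadline`."""
    sat = last_saturday(year, month)
    begin = datetime.combine(sat, start)
    end = datetime.combine(sat + timedelta(days=2), deadline)
    return begin + timedelta(hours=hours) <= end


print(last_saturday(2016, 10), fits_weekend(2016, 10, 30))
```

Feed it a measured maintenance duration (or an estimate like the compact calculation above in the thread) to see whether it spills into Monday.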

-

albertwt

- Veteran

- Posts: 966

- Liked: 55 times

- Joined: Nov 05, 2009 12:24 pm

- Location: Sydney, NSW

Re: Backup mode and setting best practice for large File ser

Cool, many thanks once again Dave.

--

/* Veeam software enthusiast user & supporter ! */

-

albertwt

- Veteran

- Posts: 966

- Liked: 55 times

- Joined: Nov 05, 2009 12:24 pm

- Location: Sydney, NSW

Re: Backup mode and setting best practice for large File ser

Hi Dave,

> DaveWatkins wrote: Personally I'd use forever forward unless you have _really_ fast disks. Running a maintenance task to defrag and compact a reverse incremental of that size would take a long time. Other than that, I'm not sure any of the new features would really help, apart from per-VM chains on the repo to keep the file sizes manageable.
>
> Stripe size at 256k assuming the Storage Optimization setting is set to LAN Target and allocation size of 64k, format it with /L in case you want to use dedup later when Windows 2016 comes out, 2012R2 likely wouldn't like the large files. No idea how big your repo is but if you think you'll want to enable dedup in 2016 keep the LUN sizes less than 64TB

Do the settings below look alright for the Veeam backup repository?

--

/* Veeam software enthusiast user & supporter ! */

-

antipolis

- Enthusiast

- Posts: 73

- Liked: 9 times

- Joined: Oct 26, 2016 9:17 am

Re: Backup mode and setting best practice for large File ser

Greetings,

As we will soon be replacing our NetApp filers, we will be transitioning our file shares (currently ~8 TB) from native NetApp CIFS (with additional SnapMirror offsite) to a Windows Server 2016 VM that will be backed up by Veeam. I plan to expand this VM's capacity gradually to keep up with user demand (~30% growth a year).
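Compounding the stated ~30% yearly growth from ~8 TB gives a feel for how quickly the VM will grow. A minimal sketch; the 16 TB and 64 TB thresholds are arbitrary examples (64 TB echoes the dedup LUN size mentioned earlier in the thread), and the function names are illustrative.

```python
import math


def projected_tb(start_tb: float, annual_growth: float, years: int) -> float:
    """Size after `years` of compound growth."""
    return start_tb * (1 + annual_growth) ** years


def years_until(start_tb: float, annual_growth: float, limit_tb: float) -> int:
    """Whole years until the size reaches or exceeds limit_tb."""
    if limit_tb <= start_tb:
        return 0
    return math.ceil(math.log(limit_tb / start_tb) / math.log(1 + annual_growth))


for year in range(6):
    print(f"year {year}: ~{projected_tb(8, 0.30, year):.1f} TB")
print("years to 16 TB:", years_until(8, 0.30, 16))
print("years to 64 TB:", years_until(8, 0.30, 64))
```

At that rate the shares roughly double in under three years and pass 64 TB in about eight.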

I have a few worries here. The first concerns defragmenting the backup files, but I guess a Windows Server 2016 ReFS repository will help a lot here (with synthetic fulls and GFS).

But this thread, vmware-vsphere-f24/large-fileserver-rep ... 32983.html, suggests that whenever we add capacity we will always face recalculation of digests and fingerprints (which will get worse and worse as the VM grows). I guess this applies to replication as well as to backup jobs and copy jobs.

These points make me question how well Veeam scales in this scenario and wonder whether I'm building a big house of cards here. An alternative would be to have VMs on both the primary and secondary sites and just use something like DFS Replication (but I've heard DFS-R also comes with its share of woes).

Any feedback or real-world experience would be very much appreciated!

Regards
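The ReFS benefit for synthetic fulls can be sketched as a simplified I/O model. It assumes that on NTFS a synthetic full copies every block into a new file (read plus write), while with ReFS block cloning (Veeam's Fast Clone, available from Backup & Replication 9.5 on Windows Server 2016 ReFS repositories) existing blocks are referenced, idealized here as zero data I/O. Function names are illustrative.

```python
# Simplified I/O model for building synthetic full backups.
# Assumptions: NTFS synthetic full = read + write of the whole full backup;
# ReFS block cloning = existing blocks referenced (idealized as zero data I/O).


def synthetic_full_io_tb(full_tb: float, block_clone: bool) -> float:
    """Approximate data I/O (TB) to build one synthetic full."""
    if block_clone:
        return 0.0  # blocks are cloned by reference; only metadata changes
    return 2 * full_tb  # read existing blocks, write them into a new file


def yearly_io_tb(full_tb: float, fulls_per_year: int, block_clone: bool) -> float:
    return fulls_per_year * synthetic_full_io_tb(full_tb, block_clone)


# ~8 TB file server with weekly synthetic fulls
print("NTFS:", yearly_io_tb(8, 52, block_clone=False), "TB/year")
print("ReFS:", yearly_io_tb(8, 52, block_clone=True), "TB/year")
```

The real ReFS figure is not zero (metadata updates and the new incremental still hit disk), but the gap explains why ReFS makes frequent synthetic fulls practical for large VMs.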

