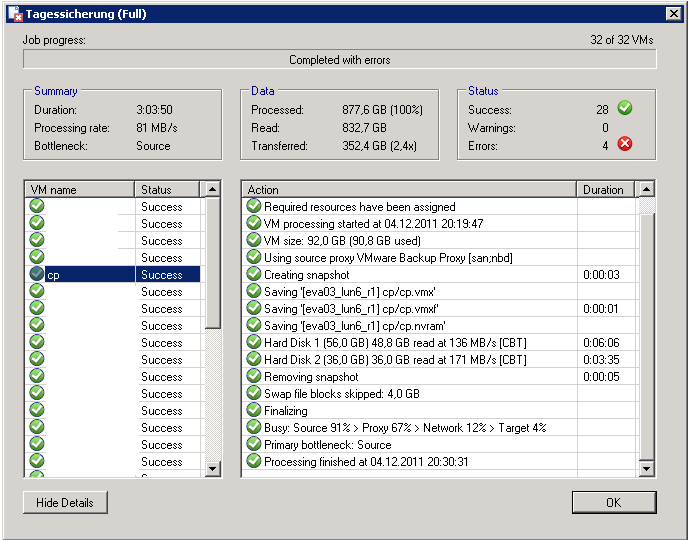

We are using VBR6 with vSphere 5 in a Direct SAN access environment (one VBR server with all "roles" installed), and I am wondering why CBT transfer rates for Reversed Incremental backups seem to be much slower than transfer rates for Full Backups. For example, the following screenshot shows a full backup of a given virtual machine running at about 130-170 MB/s:

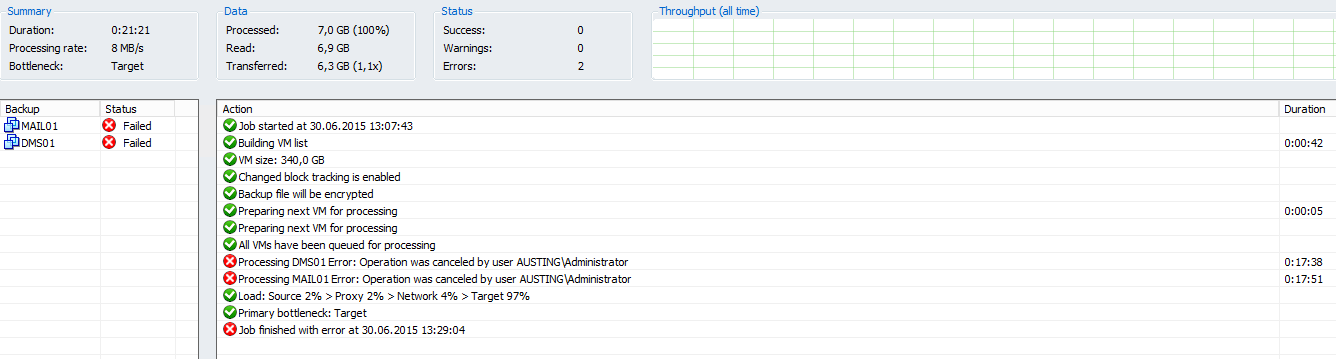

The subsequent backup (which is therefore incremental), however, seems to be much slower, at only 15-35 MB/s.

It was the same with VBR5. Shouldn't incremental backups run at the same rate as full backups? Or is this because incremental backups put more load on the target server, since the changed blocks have to be injected into the existing VBK file? At least that is what the bottleneck indicator suggests, and it would be logical.
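To put the injection idea into rough numbers, here is a back-of-envelope sketch. It assumes, as a simplification and not something I have measured, that a full backup writes each block to the target once, while a reversed incremental costs three target I/Os per changed block (read the old block from the VBK, write it to the VRB rollback file, write the new block into the VBK). The 150 MB/s repository figure is hypothetical.

```python
"""Rough model of target-side I/O for full vs. reversed incremental runs.

Assumption (simplified, not measured): full = 1 write per block;
reversed incremental = 3 I/Os per changed block
(read old block from VBK, write it to VRB, write new block into VBK).
"""

# Target I/O operations needed per block transferred from the source (assumed).
IO_PER_BLOCK = {
    "full": 1,
    "reversed_incremental": 3,
}


def effective_source_rate(target_io_rate_mb_s: float, mode: str) -> float:
    """Return the source-side MB/s the target can absorb for a given mode.

    target_io_rate_mb_s is a hypothetical total I/O capacity of the repository.
    """
    return target_io_rate_mb_s / IO_PER_BLOCK[mode]


if __name__ == "__main__":
    target_rate = 150.0  # hypothetical repository I/O capacity in MB/s
    for mode in IO_PER_BLOCK:
        print(f"{mode}: ~{effective_source_rate(target_rate, mode):.0f} MB/s")
```

This model ignores that the injected I/O is scattered across the VBK rather than sequential, which on spinning disks usually costs further throughput, so real incremental rates could land well below the one-third estimate.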

Also: why do the realtime statistics for the full backup (screenshot 1) show CBT as well? I thought CBT was only used for incremental backups.

Thanks

Michael