I would like to know how to correctly size my customer's infrastructure.

Total VMs: around 400

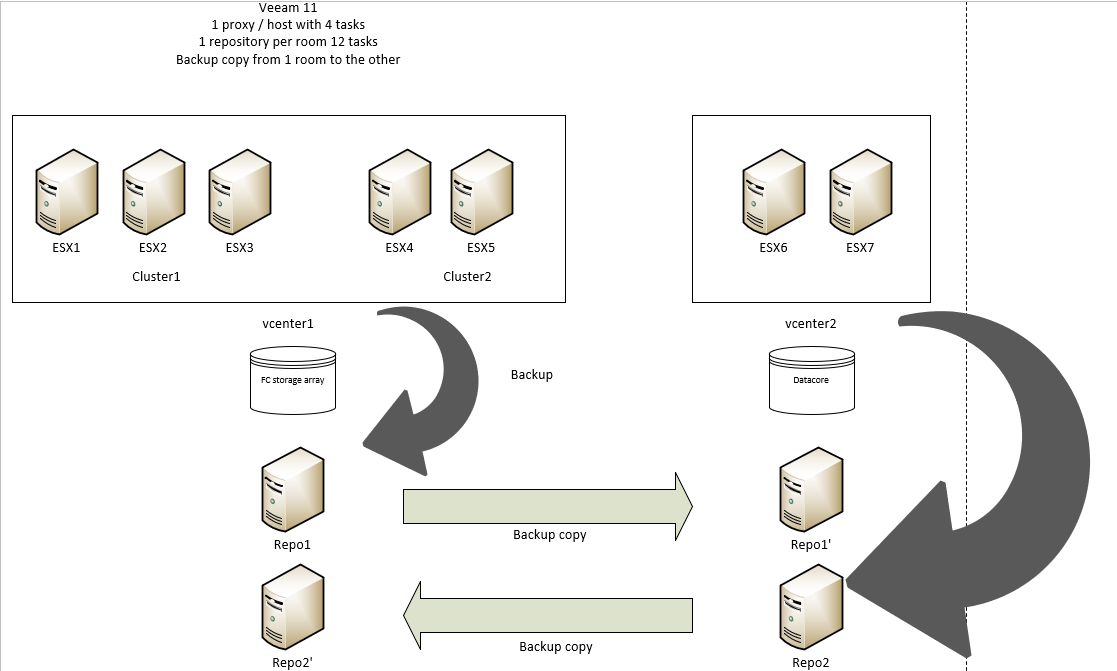

There are 2 different rooms (1 and 2).

Room 1 contains the VBR server (running as a VM) plus 3 ESX hosts to back up.

Room 2 contains 2 ESX hosts plus 2 other ESX hosts.

There are 2 vCenters: one with 3 hosts (cluster1) plus 2 remote hosts (cluster B), and another vCenter for the 2 other hosts.

The 5 hosts in the same vCenter use the same storage array.

The 2 other ESX hosts are on dedicated storage (DataCore).

Room 1 is backed up to a hardened Linux repository with 12 tasks.

Room 2 is backed up to a second hardened Linux repository with 12 tasks.

I use NBD as the transport mode because the network is 10Gb.

I installed 1 proxy per ESX host, each with 4 tasks.
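
To make the numbers concrete, here is a rough Python sketch of the task-slot arithmetic for this setup. It assumes that the proxies in a room only send data to that room's repository, which may not match how Veeam actually assigns proxies; the names are illustrative and not part of any Veeam API.

```python
# Hypothetical model of the setup described above (names are illustrative).
# Assumption: proxies in a room send data only to that room's repository.
TASKS_PER_PROXY = 4

rooms = {
    "room1": {"esx_hosts": 3, "repository_tasks": 12},
    "room2": {"esx_hosts": 4, "repository_tasks": 12},  # 2 + 2 hosts
}

def slot_summary(room):
    """Return proxy slots, repository slots, and the lower of the two."""
    proxy_slots = room["esx_hosts"] * TASKS_PER_PROXY  # 1 proxy per ESX host
    repo_slots = room["repository_tasks"]
    return {
        "proxy_slots": proxy_slots,
        "repository_slots": repo_slots,
        "effective_concurrency": min(proxy_slots, repo_slots),
    }

summary = {name: slot_summary(room) for name, room in rooms.items()}
for name, s in summary.items():
    print(name, s)
```

Under that assumption, room 2 has 16 proxy slots against 12 repository slots, so 4 proxy slots there could never be busy at the same time.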

On every backup, the bottleneck is the source (99%), followed by the target at 60%.

The backup processing rate is around 400 MB/s, but sometimes it is only 70 MB/s, probably because other processes unrelated to the backup are running on the VMs.
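
For scale, here is a quick comparison of those rates against the raw 10Gb link, as a rough sketch. It assumes decimal units (1 Gb/s = 1000 Mb/s, 8 bits per byte) and ignores protocol overhead, so the real ceiling is lower. The processing rate may also not be exactly what crosses the wire, so this is only an order-of-magnitude check.

```python
LINK_GBIT_PER_S = 10  # network speed from the post

def link_capacity_mb_per_s(gbit_per_s):
    """Theoretical line rate in MB/s (decimal units, no protocol overhead)."""
    return gbit_per_s * 1000 / 8

def utilisation_percent(rate_mb_per_s, gbit_per_s=LINK_GBIT_PER_S):
    """Share of the theoretical line rate used by a given rate in MB/s."""
    return 100 * rate_mb_per_s / link_capacity_mb_per_s(gbit_per_s)

capacity = link_capacity_mb_per_s(LINK_GBIT_PER_S)
for rate in (400, 70):
    print(f"{rate} MB/s is {utilisation_percent(rate):.1f}% of {capacity:.0f} MB/s")
```

Even the best case is well below the theoretical line rate, which is consistent with the network not being reported as the bottleneck.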

I have a backup copy job in mirroring mode, but it doesn't start until my backup job finishes.

I would like to know:

How can I improve performance through the number of tasks on the proxies and repositories? I chose the current values arbitrarily.

Do I have to use different schedules for my backup jobs? Currently, they all start at the same time.

When Veeam is backing up my vCenter, it can't back up any other VM, because (I suppose) it can't connect to vCenter to process them. I get a license error ([Error Unhandled exception was thrown during licensing process]), but only for a few VMs; all the others are then processed without problems.

I use per-VM backup jobs.

I can provide any details you need; I would just like to understand how to maximize performance for this customer.

Thanks for your help.