-

ashleyw

- Veteran

- Posts: 256

- Liked: 79 times

- Joined: Oct 28, 2010 10:55 pm

- Full Name: Ashley Watson

- Contact:

Re: OpenZFS 2.2 support for reflinks now available

BTW, I've opened up a defect report on the ZFS forums as I can simulate a deadlock at the file system layer on tin (removing Veeam and VMware from the mix).

I suspect some of the issues I'm having may be related to certain load patterns when dealing with many large files with a heavy degree of cloned blocks - certainly from my testing it doesn't take too many large file deletes to cause a ZFS deadlock which can last for up to 30 minutes or so. If anyone is interested: https://github.com/openzfs/zfs/issues/16680

My guess at this stage is that the deadlocks are occurring randomly and causing timeouts at the synthetic backup creation and leading to the errors in Veeam I'm seeing.

-

HannesK

- Product Manager

- Posts: 15896

- Liked: 3564 times

- Joined: Sep 01, 2014 11:46 am

- Full Name: Hannes Kasparick

- Location: Austria

- Contact:

Re: OpenZFS 2.2 support for reflinks now available

thanks for sharing!

-

ashleyw

- Veteran

- Posts: 256

- Liked: 79 times

- Joined: Oct 28, 2010 10:55 pm

- Full Name: Ashley Watson

- Contact:

Re: OpenZFS 2.2 support for reflinks now available

For anyone interested in experimenting with zfs with large Veeam backup workloads etc, I recommend for now disabling synthetic full backups, disabling GFS on copy jobs, and switching to regular active full backups (which are obviously space intensive but are fast and reliable - depending on your zpool/vdev configuration).

-

ashleyw

- Veteran

- Posts: 256

- Liked: 79 times

- Joined: Oct 28, 2010 10:55 pm

- Full Name: Ashley Watson

- Contact:

Re: OpenZFS 2.2 support for reflinks now available

For anyone still following this, I've been running many tests under 2.3 RC3 and have the following conclusions.

- The message "Agent: Failed to process method {Transform.CompileFIB}: Resource temporarily unavailable" still occurs when synthetics are enabled and block cloning is enabled on ZFS.

- There is no currently agreed root cause as to which side of the fence the issue exists, but Veeam suspects this is related to OpenZFS. However using the OpenZFS block cloning reliability tests I've been unable to isolate the issue to OpenZFS.

- The following doesn't eliminate the errors but seems to impact at which stage in the job they occur.

-> Change blocksize from 4MB to 8MB (or even downwards).

-> Changing compression level at a job level from optimal to none.

-> Reducing the number of concurrent jobs hitting the repository.

-> The number of jobs hitting the repositories simultaneously in the transformation stage does not appear to correlate to the failures.

- There appear to be long standing issues with synthetic backups and the load they place on the target storage device even when block cloning is in use, so even on commercial solutions many people seem to run without synthetic solutions.

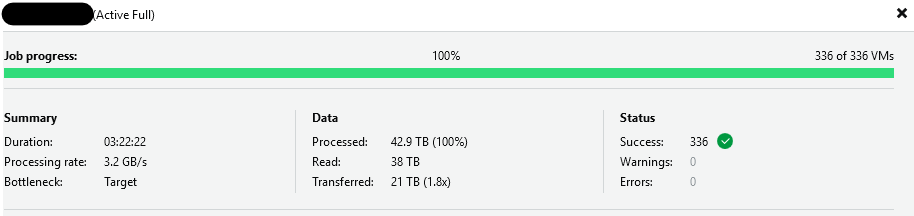

- As a backup target with synthetic backups disabled, an OpenZFS appliance (in our case running Rocky9 on commodity tin with a standard HBA controller, with 23 spindles split over 4 vdevs) can easily saturate a 10Gb link throughout the entire period of an Active Full backup, so we are in the process of upgrading our interconnects to 25Gb.

- I'm working with the Veeam and OpenZFS teams on figuring out the best way of moving forwards with this. (thanks to @hannesk).

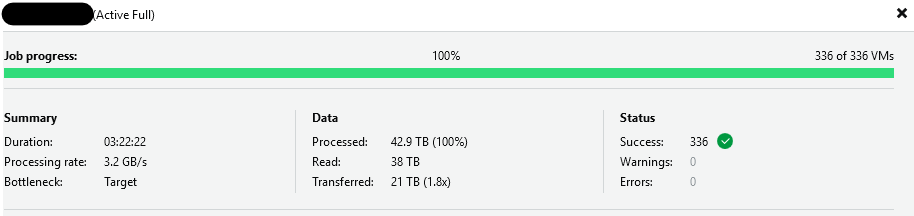

- This is what active fulls look like with this configuration, which appears to be a healthy throughput;

-

HannesK

- Product Manager

- Posts: 15896

- Liked: 3564 times

- Joined: Sep 01, 2014 11:46 am

- Full Name: Hannes Kasparick

- Location: Austria

- Contact:

Re: OpenZFS 2.2 support for reflinks now available

Hello,

thank you Ashley for doing all the tests and working with the OpenZFS team. The ZFS team is working on improvements.

From Veeam side, ZFS will stay unsupported for now.

Best regards

Hannes

-

ashleyw

- Veteran

- Posts: 256

- Liked: 79 times

- Joined: Oct 28, 2010 10:55 pm

- Full Name: Ashley Watson

- Contact:

Re: OpenZFS 2.2 support for reflinks now available

for anyone following this, there has been a breakthrough over the last week.

The OpenZFS team have made a number of changes/improvements to the block cloning logic in OpenZFS.

This is currently targeted for 2.3RC4, which hasn't been tagged yet, so it's likely to be quite a while before it hits the standard package repos. For now the only way of accessing this is to build OpenZFS from source.

The other change required is that the parameter "zfs_bclone_wait_dirty" needs to be set to 1, otherwise the load patterns of Veeam synthetic fulls can trigger the old message "Agent: Failed to process method {Transform.CompileFIB}: Resource temporarily unavailable"

https://openzfs.github.io/openzfs-docs/ ... wait_dirty

We noticed another issue in that even when we set the ZFS module parameters like the following;

Code: Select all

# vi /etc/modprobe.d/zfs.conf

options zfs zfs_bclone_enabled=1

options zfs zfs_bclone_wait_dirty=1

after a reboot, the setting was still zero.

Code: Select all

# cat /sys/module/zfs/parameters/zfs_bclone_wait_dirty

0

So to persist the value of 1 for now, the setting has to be reapplied at boot, and the simplest way I could find on Rocky9 was to use the @reboot option in crontab;

Code: Select all

# crontab -u root -e

@reboot echo 1 > /sys/module/zfs/parameters/zfs_bclone_wait_dirty

We ran a number of tests and were unable to get a failure on a synthetic job, and the stats are looking great;

Code: Select all

# zpool get all |grep clone

VeeamBackup bcloneused 18.6T -

VeeamBackup bclonesaved 18.6T -

VeeamBackup bcloneratio 2.00x -

Big shout out to Hannes/OpenZFS team - especially Alex.

ZFS rocks!
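The "config file says 1, running module says 0" situation described in this post is easy to miss, so here is a minimal sketch of a check that compares a wanted value against what the loaded module reports. The function name `check_param` and the `PARAM_DIR` override are my own illustrative names, not part of ZFS or Veeam; the default path is the sysfs directory used in the commands above.

```shell
#!/bin/sh
# Directory holding the live module parameters. The default is the sysfs path
# used in this thread; PARAM_DIR is a hypothetical override for dry runs.
PARAM_DIR="${PARAM_DIR:-/sys/module/zfs/parameters}"

# check_param NAME WANT
# Prints "ok NAME=VALUE" when the live value equals WANT, otherwise a
# MISMATCH or MISSING line, and returns non-zero in those cases.
check_param() {
    name="$1"
    want="$2"
    if [ ! -r "$PARAM_DIR/$name" ]; then
        echo "MISSING $name"
        return 1
    fi
    have="$(cat "$PARAM_DIR/$name")"
    if [ "$have" = "$want" ]; then
        echo "ok $name=$have"
    else
        echo "MISMATCH $name=$have (want $want)"
        return 1
    fi
}
```

Running `check_param zfs_bclone_wait_dirty 1` after each reboot or package upgrade would flag the case where the value has silently gone back to 0.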

-

Gostev

- former Chief Product Officer (until 2026)

- Posts: 33084

- Liked: 8179 times

- Joined: Jan 01, 2006 1:01 am

- Location: Baar, Switzerland

- Contact:

Re: OpenZFS 2.2 support for reflinks now available

@ashleyw thank you for keeping us posted. Please let us know once this version hits the standard package repos, as if you're still confident with its long term reliability then, I guess it will be a good time for me to ask QA to perform full regression testing on their end so we could remove the experimental support clause from this integration.

My only concern is that if making this a part of standard package repos takes too long, then we will be already quite close to V13 and QA will be rightfully refusing any and all unplanned tasks. But in that case maybe I can agree with them to move only a few customers like yourself off of experimental support, by marking your account accordingly in the Customer Support database.

-

ashleyw

- Veteran

- Posts: 256

- Liked: 79 times

- Joined: Oct 28, 2010 10:55 pm

- Full Name: Ashley Watson

- Contact:

Re: OpenZFS 2.2 support for reflinks now available

@Gostev, thanks for that. I believe the OpenZFS team were originally hoping to ship 2.3 before fall of 2024, but in view of the block cloning optimisations and other functionality currently being worked on, I wouldn't want to speculate on a planned date for 2.3 to ship. From where I'm sitting there is likely to be a 2.3 rc4 prior to the final release, so my gut feel is quarter 1 2025, but Hannes could potentially ask iXsystems for their view.

So far we have hit no issues with 2.3 rc3 provided that the appropriate tuning parameters are used as described in this thread.

The only issues now are that our Veeam backups now run too fast with too much reliability

Within our company I represent the development services we supply to our own commercial development teams, so we are often able to take a slightly more experimental approach than our external customer centric divisions - and often this allows us to test out innovative approaches well before it hits our wider group.

So it would be fantastic if 2.3 could be planned to be supported by Veeam at some stage in the future, but the "experimental" tag doesn't really impact us directly at this stage as long as we can occasionally continue some technical dialogue from time to time with Hannes and the OpenZFS team.

It really is refreshing to see how dedicated Veeam is towards supporting their customers and helping to drive innovation - so massive thanks to all.

-

ashleyw

- Veteran

- Posts: 256

- Liked: 79 times

- Joined: Oct 28, 2010 10:55 pm

- Full Name: Ashley Watson

- Contact:

Re: OpenZFS 2.2 support for reflinks now available

OpenZFS 2.3rc4 has just been released - I believe this is likely the last RC release before the 2.3 final version is released sometime next year.

We have had no issues running 2.3rc3, but 2.3rc4 introduced some further optimisations around block cloning, hence the reason we are rolling with it now rather than waiting.

The approach we took to get this up and running on rocky9 was the following;

Code: Select all

# dnf config-manager --set-enabled crb

# dnf install --skip-broken epel-release gcc make autoconf automake libtool rpm-build kernel-rpm-macros libtirpc-devel libblkid-devel libuuid-devel libudev-devel openssl-devel zlib-devel libaio-devel libattr-devel elfutils-libelf-devel kernel-devel-$(uname -r) kernel-abi-stablelists-$(uname -r | sed 's/\.[^.]\+$//') python3 python3-devel python3-setuptools python3-cffi libffi-devel

# dnf install --skip-broken --enablerepo=epel python3-packaging dkms

# cd /root

# wget https://github.com/openzfs/zfs/releases/download/zfs-2.3.0-rc4/zfs-2.3.0-rc4.tar.gz

# tar xvf zfs-2.3.0-rc4.tar.gz

# cd zfs-2.3.0-rc4

# sh autogen.sh

# ./configure

# make -j1 rpm-utils rpm-dkms

# yum localinstall *.$(uname -p).rpm *.noarch.rpm

# reboot

# zfs version

zfs-2.3.0-rc4

zfs-kmod-2.3.0-rc4
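After a source/DKMS build like the one above, the two lines printed by `zfs version` should agree. As a rough sketch, the comparison can be automated by parsing that output; `versions_match` is a hypothetical helper name of my own, and it only assumes the two-line `zfs-...` / `zfs-kmod-...` format shown in this post.

```shell
#!/bin/sh
# versions_match: reads `zfs version` output on stdin and checks that the
# userland version (zfs-X) equals the kernel module version (zfs-kmod-X).
versions_match() {
    user=""
    kmod=""
    while IFS= read -r line; do
        case "$line" in
            zfs-kmod-*) kmod="${line#zfs-kmod-}" ;;  # must be tested before zfs-*
            zfs-*)      user="${line#zfs-}" ;;
        esac
    done
    if [ -n "$user" ] && [ "$user" = "$kmod" ]; then
        echo "match $user"
    else
        echo "mismatch userland=$user kmod=$kmod"
        return 1
    fi
}
```

Typical use would be `zfs version | versions_match`; a mismatch usually means the old kernel module is still the one that got loaded.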

-

ashleyw

- Veteran

- Posts: 256

- Liked: 79 times

- Joined: Oct 28, 2010 10:55 pm

- Full Name: Ashley Watson

- Contact:

Re: OpenZFS 2.2 support for reflinks now available

For anyone interested, the ZFS 2.3 release hit the official testing repositories earlier this week - at least for Rocky9.5.

One of the new features of ZFS is called DirectIO - sadly the default DirectIO settings break the Veeam integration.

Veeam is aware of this. https://github.com/openzfs/zfs/issues/16953

As I understand it, DirectIO is designed for very fast NVMe based systems to bypass the ZFS ARC (cache) - the reasoning is that pushing the data through the cache may slow throughput.

However, the vast majority of backup infrastructure is likely to use traditional disks, and the workload patterns of Veeam will likely negate any benefits.

It's easy to work around this by disabling DirectIO until upstream changes can be made to Veeam/ZFS.

The process below is the one we are now using on all our ZFS based SANs used for Veeam.

1. Install ZFS from testing repositories as per standard ZFS install and configure ZFS parameters required for Veeam integration

Code: Select all

# dnf install https://zfsonlinux.org/epel/zfs-release-2-3$(rpm --eval "%{dist}").noarch.rpm

# dnf install -y epel-release

# dnf install -y kernel-devel

# dnf config-manager --enable zfs-testing

# dnf install zfs

# zfs version

zfs-2.3.0-1

zfs-kmod-2.3.0-1

# vi /etc/modprobe.d/zfs.conf

options zfs zfs_bclone_enabled=1 zfs_bclone_wait_dirty=1 zfs_dio_enabled=0

# reboot

2. Enable Veeam detection for block cloning

Code: Select all

# mkdir /etc/veeam

# touch /etc/veeam/EnableZFSBlockCloning

3. Check ZFS parameter values - they should be exactly as below

Code: Select all

# cat /sys/module/zfs/parameters/zfs_bclone_enabled

1

# cat /sys/module/zfs/parameters/zfs_bclone_wait_dirty

1

# cat /sys/module/zfs/parameters/zfs_dio_enabled

0

4. If values are not as above, or the versions of zfs and zfs-kmod differ (from the zfs version command), then persist values by setting values and then recreate the initramfs file

Code: Select all

# cd /boot

# echo 1 >/sys/module/zfs/parameters/zfs_bclone_enabled

# echo 1 >/sys/module/zfs/parameters/zfs_bclone_wait_dirty

# echo 0 >/sys/module/zfs/parameters/zfs_dio_enabled

# dracut -f initramfs-$(uname -r).img $(uname -r)

# reboot

5. Identify block devices

Code: Select all

# lsblk -d

6. Create zpool and disable sync and atime for performance

Code: Select all

# zpool create VeeamBackup /dev/sdc

# zfs set sync=disabled VeeamBackup

# zfs set atime=off VeeamBackup

7. Dial in repository into Veeam UI.

8. Sit back and be amazed by the goodness of OpenZFS!

In our case one of our Dell PowerEdge servers has 24x16TB SAS block devices, and these appear as sda through sdx (with the OS booting from a separate NVMe device) so we leverage striping across multiple vdevs to improve performance.

We also disable Native Command Queuing (NCQ) on the drives to further improve throughput.

Code: Select all

# for drive in sd{a..x};do

# echo 1 > /sys/block/$drive/device/queue_depth

# done

# zpool create VeeamBackup raidz1 sda sdb sdc sdd sde sdf -f

# zpool add VeeamBackup raidz1 sdg sdh sdi sdj sdk sdl -f

# zpool add VeeamBackup raidz1 sdm sdn sdo sdp sdq sdr -f

# zpool add VeeamBackup raidz1 sds sdt sdu sdv sdw -f

# zpool add VeeamBackup spare sdx -f

# zfs set sync=disabled VeeamBackup

# zfs set atime=off VeeamBackup
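The pool layout above (raidz1 vdevs of up to six drives, striped together, with the last drive kept as a spare) follows a regular pattern, so here is a rough sketch that only prints the matching `zpool` commands for review and never runs them. `plan_pool` is a hypothetical helper of my own; the pool name, vdev width and drive names are whatever you pass in.

```shell
#!/bin/sh
# plan_pool POOL WIDTH DRIVE...
# Prints (does not execute) zpool commands that group the drives into raidz1
# vdevs of WIDTH drives each and reserve the last drive as a hot spare.
plan_pool() {
    pool="$1"
    width="$2"
    shift 2
    verb="create"   # first vdev creates the pool, later ones are added
    group=""
    count=0
    # Consume every drive except the last one, which becomes the spare.
    while [ "$#" -gt 1 ]; do
        group="$group $1"
        count=$((count + 1))
        shift
        if [ "$count" -eq "$width" ]; then
            echo "zpool $verb $pool raidz1$group"
            verb="add"
            group=""
            count=0
        fi
    done
    # Emit a final, shorter vdev if drives are left over.
    if [ -n "$group" ]; then
        echo "zpool $verb $pool raidz1$group"
    fi
    echo "zpool add $pool spare $1"
}
```

Called with `VeeamBackup 6` and the 24 drives sda to sdx, this prints three six-drive vdevs, one five-drive vdev (sds to sdw) and sdx as the spare, which is the same shape as the commands in this post.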

-

ashleyw

- Veteran

- Posts: 256

- Liked: 79 times

- Joined: Oct 28, 2010 10:55 pm

- Full Name: Ashley Watson

- Contact:

Re: OpenZFS 2.2 support for reflinks now available

Just a quick follow up for people who don't have time to trawl the GitHub updates.

The current discussion is going on here; https://github.com/openzfs/zfs/pull/16972 and is referring to DirectIO and block alignment - this appears to be the main outstanding issue with Veeam and OpenZFS integration.

I'm assuming that once the change reaches the OpenZFS testing repositories (some weeks away I guess), some testing will be required to ensure Veeam behaves as expected without having to disable DirectIO.

If DirectIO is disabled as per the zfs_dio_enabled parameter above, this situation is avoided until a permanent fix by the OpenZFS team has been released.

On our Dell PowerEdge servers running OpenZFS on Rocky9, we continue to see excellent performance (based on the configuration above), and have had no reliability issues whatsoever.

-

ashleyw

- Veteran

- Posts: 256

- Liked: 79 times

- Joined: Oct 28, 2010 10:55 pm

- Full Name: Ashley Watson

- Contact:

Re: OpenZFS 2.2 support for reflinks now available

OpenZFS 2.3.2 has just been released. Looking at the changelist on GitHub it contains "Linux/vnops: implement STATX_DIOALIGN.", so the necessary changes are now within the release.

I've just updated two of our Rocky9 servers without issues.

It would be great if @Hannesk could get the Veeam dev team to take a look at some stage! thanks.

-

HannesK

- Product Manager

- Posts: 15896

- Liked: 3564 times

- Joined: Sep 01, 2014 11:46 am

- Full Name: Hannes Kasparick

- Location: Austria

- Contact:

Re: OpenZFS 2.2 support for reflinks now available

yes thanks, I saw the news and tested the V13 software appliance with ZFS 2.3.2. It works out of the box now without disabling direct_IO / switching to the legacy data mover option on Veeam side.

Just by chance, I was also able to reproduce "your" error last week. With only two VMs (one 10TB VM and one 1TB VM) in two jobs that are reflinking at the same time I got "your" error:

Code: Select all

7:35:32 AM Synthetic full backup creation failed Error: Agent: Failed to process method {Transform.CompileFIB}: Resource temporarily unavailable Failed to clone file extent. Source offset: 1835008, target offset: 1835008, length: 131072

7:35:34 AM Agent: Failed to process method {Transform.CompileFIB}: Resource temporarily unavailable Failed to clone file extent. Source offset: 1835008, target offset: 1835008, length: 131072

I sent over the details to the ZFS team and they want to look into it.

While you got it stable by changing ZFS settings, we need to ensure that default settings work fine.

-

ashleyw

- Veteran

- Posts: 256

- Liked: 79 times

- Joined: Oct 28, 2010 10:55 pm

- Full Name: Ashley Watson

- Contact:

Re: OpenZFS 2.2 support for reflinks now available

That's great Hannes. Hopefully they'll be able to figure out the behavior causing the issue from the default of "zfs_bclone_wait_dirty=0".

I remember at the time thinking ZFS always has data integrity as its number one objective, so I couldn't understand why the default wouldn't be 1, as every write or clone operation should always reliably succeed regardless of the IO pattern being generated by the application.

zfs_bclone_wait_dirty=0|1 (int)

When set to 1 the FICLONE and FICLONERANGE ioctls wait for dirty data to be written to disk. This allows the clone operation to reliably succeed when a file is modified and then immediately cloned. For small files this may be slower than making a copy of the file. Therefore, this setting defaults to 0 which causes a clone operation to immediately fail when encountering a dirty block.
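The "modified and then immediately cloned" pattern in that description can be poked at with a small probe. This is only a sketch under assumptions: it relies on GNU `cp --reflink=always` (which, as far as I know, issues the FICLONE ioctl), the helper name `probe_clone` and the file names are made up, and a single small file may well not reproduce what a Veeam synthetic full triggers.

```shell
#!/bin/sh
# probe_clone DIR
# Writes fresh data into DIR and clones it straight away, without syncing,
# so the source blocks may still be dirty. Prints "clone ok" or "clone failed".
probe_clone() {
    dir="$1"
    src="$dir/probe.src"
    dst="$dir/probe.dst"
    rm -f "$src" "$dst"
    # 8 MiB of random data; random so that compression cannot turn it into holes.
    dd if=/dev/urandom of="$src" bs=1048576 count=8 2>/dev/null
    # --reflink=always refuses to fall back to a normal copy, so any clone
    # error (including an unsupported filesystem) shows up as a failure.
    if cp --reflink=always "$src" "$dst" 2>/dev/null; then
        echo "clone ok"
    else
        echo "clone failed"
    fi
    rm -f "$src" "$dst"
}
```

Running `probe_clone /VeeamBackup/test` in a loop with zfs_bclone_wait_dirty at 0 and then at 1 is one way to compare the two settings on your own pool. On a filesystem without reflink support it will always print "clone failed".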

-

ashleyw

- Veteran

- Posts: 256

- Liked: 79 times

- Joined: Oct 28, 2010 10:55 pm

- Full Name: Ashley Watson

- Contact:

Re: OpenZFS 2.2 support for reflinks now available

Have there been any changes in ZFS v2.3.3 (that was released yesterday), that help with the Veeam reliability specifically with regards to having to set the zfs_bclone_wait_dirty parameter?

@HannesK Is there a public GitHub issue where the outstanding Veeam/ZFS issues are being discussed or is it private?

We continue to have excellent throughput and reliability and have finally been able to upgrade our primary devices to use 25GbE (only our replica and lab machines are still on 10GbE, and I'm trying to work through the politics on those).

-

HannesK

- Product Manager

- Posts: 15896

- Liked: 3564 times

- Joined: Sep 01, 2014 11:46 am

- Full Name: Hannes Kasparick

- Location: Austria

- Contact:

Re: OpenZFS 2.2 support for reflinks now available

Hello,

I'm not aware of any changes relevant for reflink / Veeam.

The conversation about improving things happens directly between iXsystems / TrueNAS and myself because I only have TrueNAS at a decent size in my lab to reproduce the issue. With my virtual ZFS repositories, which hold much smaller VMs, I could not reproduce the problem.

Technically you could probably also open a GitHub conversation where you reproduce the problem and describe that you fix it by changing the setting from above.

Best regards

Hannes

-

ashleyw

- Veteran

- Posts: 256

- Liked: 79 times

- Joined: Oct 28, 2010 10:55 pm

- Full Name: Ashley Watson

- Contact:

Re: OpenZFS 2.2 support for reflinks now available

Thanks, Hannes,

I'll reach out to you privately to discuss the best way of engaging Alexander Motin if you consider it worthwhile (as there is a working configuration that we use, listed below).

The problem configurations are around zfs_bclone_wait_dirty and zfs_dio_enabled (see below).

I've upgraded all our backup targets to OpenZFS 2.3.3, and have summarised the current configurations and the results from our jobs.

All tests were run by forcing a synthetic full;

Force synthetic full on each run and set the schedule to run a synthetic full on the job every day of the week.

HKEY_LOCAL_MACHINE\SOFTWARE\Veeam\Veeam Backup and Replication\ForceTransform

Type: REG_DWORD (0 False, 1 True)

Default value: 0 (disabled)

Description: When set to 1, will trigger synthetic full on every job run

1. Test with known working parameters

* zfs_bclone_enabled=1 zfs_bclone_wait_dirty=1 zfs_dio_enabled=0

* WORKING CONFIG

Code: Select all

echo "options zfs zfs_bclone_enabled=1 zfs_bclone_wait_dirty=1 zfs_dio_enabled=0" > /etc/modprobe.d/zfs.conf

dracut -f /boot/initramfs-$(uname -r).img $(uname -r)

reboot

echo "zfs_bclone_enabled:`cat /sys/module/zfs/parameters/zfs_bclone_enabled`"

echo "zfs_bclone_wait_dirty:`cat /sys/module/zfs/parameters/zfs_bclone_wait_dirty`"

echo "zfs_dio_enabled:`cat /sys/module/zfs/parameters/zfs_dio_enabled`"

zfs_bclone_enabled:1

zfs_bclone_wait_dirty:1

zfs_dio_enabled:0

All works fine.

2. Test with zfs_dio_enabled=1

* zfs_bclone_enabled=1 zfs_bclone_wait_dirty=1 zfs_dio_enabled=1

* FAIL: Immediately

Code: Select all

echo "options zfs zfs_bclone_enabled=1 zfs_bclone_wait_dirty=1 zfs_dio_enabled=1" > /etc/modprobe.d/zfs.conf

dracut -f /boot/initramfs-$(uname -r).img $(uname -r)

reboot

echo "zfs_bclone_enabled:`cat /sys/module/zfs/parameters/zfs_bclone_enabled`"

echo "zfs_bclone_wait_dirty:`cat /sys/module/zfs/parameters/zfs_bclone_wait_dirty`"

echo "zfs_dio_enabled:`cat /sys/module/zfs/parameters/zfs_dio_enabled`"

zfs_bclone_enabled:1

zfs_bclone_wait_dirty:1

zfs_dio_enabled:1

Immediately fails with

24/06/2025 9:14:00 am :: Processing d-kelvinn-vdi Error: Invalid argument

Asynchronous request operation has failed. [size = 524288] [offset = 4096] [bufaddr = 0x00007f8461a5d200] [res = -22; 0]

Failed to open storage for read/write access. Storage: [/VeeamBackup/test/testvm.vm-495323D2025-06-24T091336_2C96.vib].

3. Test with zfs_bclone_wait_dirty=0

* zfs_bclone_enabled=1 zfs_bclone_wait_dirty=0 zfs_dio_enabled=0

* FAIL: 7 minutes into job.

Code: Select all

echo "options zfs zfs_bclone_enabled=1 zfs_bclone_wait_dirty=0 zfs_dio_enabled=0" > /etc/modprobe.d/zfs.conf

dracut -f /boot/initramfs-$(uname -r).img $(uname -r)

reboot

echo "zfs_bclone_enabled:`cat /sys/module/zfs/parameters/zfs_bclone_enabled`"

echo "zfs_bclone_wait_dirty:`cat /sys/module/zfs/parameters/zfs_bclone_wait_dirty`"

echo "zfs_dio_enabled:`cat /sys/module/zfs/parameters/zfs_dio_enabled`"

zfs_bclone_enabled:1

zfs_bclone_wait_dirty:0

zfs_dio_enabled:0

24/06/2025 12:13:33 pm :: Agent: Failed to process method {Transform.CompileFIB}: Resource temporarily unavailable

Failed to clone file extent. Source offset: 2359296, target offset: 19660800, length: 524288
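The three test configurations above differ only in the values on the "options zfs" line. As a minimal sketch (the helper names zfs_options_line and write_zfs_options are hypothetical, not part of OpenZFS), the line can be generated rather than retyped for each test:

```bash
# Sketch: build the "options zfs ..." line used in the three tests.
# zfs_options_line and write_zfs_options are hypothetical helper names.
zfs_options_line() {
    local bclone="$1" wait_dirty="$2" dio="$3"
    printf 'options zfs zfs_bclone_enabled=%s zfs_bclone_wait_dirty=%s zfs_dio_enabled=%s\n' \
        "$bclone" "$wait_dirty" "$dio"
}

# Write the line to a config file. The fourth argument is optional and
# defaults to the path used in the tests above.
write_zfs_options() {
    local conf="${4:-/etc/modprobe.d/zfs.conf}"
    zfs_options_line "$1" "$2" "$3" > "$conf"
}
```

For example, "write_zfs_options 1 1 0" would reproduce the working configuration from test 1. The initramfs rebuild and reboot are still needed afterwards, as in the tests.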

-

6equj5

- Novice

- Posts: 7

- Liked: 1 time

- Joined: Mar 03, 2014 6:01 am

- Contact:

Re: OpenZFS 2.2 support for reflinks now available

1. Test with known working parameters

* zfs_bclone_enabled=1 zfs_bclone_wait_dirty=1 zfs_dio_enabled=0

* WORKING CONFIG

Code: Select all

zfs-2.3.3-pve1

zfs-kmod-2.3.3-pve1

-

ashleyw


Quote: This works also on PVE 9 but ... it's utilizing fast clone pretty randomly. Most VMs are just "normal" merged (slow). Are there any constraints on the chain maybe?

I'm not sure how you are testing, but are you able to confirm that these 3 variables are set as follows after a boot? Proxmox ZFS kernel parameters may be set differently after a reboot or a Proxmox upgrade, due to the appliance design of Proxmox. Also, have you forced a synthetic full on each full run with the registry entry "HKEY_LOCAL_MACHINE\SOFTWARE\Veeam\Veeam Backup and Replication\ForceTransform", as mentioned previously?

All my tests were on Rocky9 to give me complete control of the tin.

I do use Proxmox in a home/lab setting, but not as a backup target for Veeam.

Code: Select all

echo "zfs_bclone_enabled:`cat /sys/module/zfs/parameters/zfs_bclone_enabled`"

echo "zfs_bclone_wait_dirty:`cat /sys/module/zfs/parameters/zfs_bclone_wait_dirty`"

echo "zfs_dio_enabled:`cat /sys/module/zfs/parameters/zfs_dio_enabled`"

zfs_bclone_enabled:1

zfs_bclone_wait_dirty:1

zfs_dio_enabled:0

Looking quickly at the release notes, there is one change that defaults the bclone_wait_dirty to 1 now (hooray!); https://github.com/openzfs/zfs/pull/17455

OpenZFS is looking better than ever!

(By the way, the latest version of Proxmox, PVE 9.0.6, still seems to be tracking a slightly earlier version for now - 2.3.3)

Code: Select all

zfs --version

zfs-2.3.4-1

zfs-kmod-2.3.4-1

-

HannesK


Quote: Most VMs are just "normal" merged (slow). Are there any constraints on the chain maybe?

"Most" sounds strange. I can only guess that the backup files were copied manually somehow. If that guess is correct, then using "move backup" to another repository and back should re-enable fast clone / reflink for those machines.

In general it sounds odd to me to use a hypervisor host as a repository. Sounds like the worst option for something that is unsupported anyway...

Quote: Looking quickly at the release notes, there is one change that defaults the bclone_wait_dirty to 1 now (hooray!);

That's good news. I would assume that ZFS works out of the box with default settings once Veeam Backup & Replication V13 ships, because V13 will have the block alignment fix, which means setting zfs_dio_enabled=0 is not needed anymore.

Quote: Also, have you forced a synthetic full on each full run

This was only for testing, to stress the file system as much as possible and see whether it's stable. It should not be used in general. That is a "lab only" setting.

-

Gostev

- former Chief Product Officer (until 2026)

- Posts: 33084

- Liked: 8179 times

- Joined: Jan 01, 2006 1:01 am

- Location: Baar, Switzerland

- Contact:

Re: OpenZFS 2.2 support for reflinks now available

@ashleyw @HannesK could you help me understand the latest and greatest status with ZFS block cloning (it's important that you both agree to it). Are we still waiting for some fixes or defaults changes to be done, or it's finally the time to get our QA on this beast after we ship 13.0.1 to see if we can have this officially supported? Thanks

-

ashleyw


Sorry for the length of this post; the answer on support of OpenZFS is complex and there is no real official view without consulting lawyers.

My understanding is as follows;

Based on information here;

https://openzfs.github.io/openzfs-docs/ ... .html#dkms

https://openzfs.github.io/openzfs-docs/ ... positories

I've run a variety of tests (see below) and my summary is;

- Not using the testing repository results in the 2.2.8 release of OpenZFS. By default this has neither block cloning nor block clone wait dirty enabled. The 2.2.8 branch does not support direct IO (DIO). It can be made to work reliably for Veeam by setting both zfs_bclone_enabled and zfs_bclone_wait_dirty to 1.

- Using the latest testing repository results in version 2.3.4 of OpenZFS. By default zfs_bclone_enabled, zfs_bclone_wait_dirty and zfs_dio_enabled are all set to 1. Having zfs_dio_enabled=1 breaks Veeam V12 integration. Hannes said this particular issue has been resolved in V13.

- If OpenZFS support was elevated in Veeam, Veeam would need to determine a minimum supported version of OpenZFS. If it was, say, 2.2.4, the following checks would be needed;

1. If target is Veeam V12 and OpenZFS version is >=2.2.4 and <2.3

/sys/module/zfs/parameters/zfs_bclone_enabled=1

/sys/module/zfs/parameters/zfs_bclone_wait_dirty=1

2. If target is Veeam V12 and OpenZFS version is >=2.3

/sys/module/zfs/parameters/zfs_bclone_enabled=1

/sys/module/zfs/parameters/zfs_bclone_wait_dirty=1

/sys/module/zfs/parameters/zfs_dio_enabled=0

3. If target is Veeam V13 and OpenZFS version is >=2.2.4 and <2.3

/sys/module/zfs/parameters/zfs_bclone_enabled=1

/sys/module/zfs/parameters/zfs_bclone_wait_dirty=1

- The default values of zfs_bclone_enabled, zfs_bclone_wait_dirty and zfs_dio_enabled are different between OpenZFS 2.2.x and 2.3.x (and zfs_dio_enabled does not exist at all in 2.2.8).

- It would be beneficial if Veeam could back port the V13 behavior around zfs_dio_enabled to a V12 patch release so that both V12 and V13 could work reliably regardless of the zfs_dio_enabled setting.

- Synthetic fulls will not work correctly unless block cloning and block clone wait dirty are enabled as the transformation stage will break or the job will randomly fail in the ways described earlier on in this thread.

- Due to the number of changes and optimisations in place (some of which are specific to Veeam), attempting to support anything earlier than the latest 2.2 release (i.e. 2.2.8) is probably not desirable. Preferably only the 2.3 branch would be supported, to avoid confusion.

- Red Hat doesn't officially support ZFS, primarily due to a licensing conflict between ZFS and the Linux GPL. Rocky is based off the Red Hat source code. This means the kernel modules have to be rebuilt under RHEL and clones like Rocky to use ZFS, which in turn means secure boot needs to be disabled, which some may consider a security risk. The secure boot issue could potentially be mitigated by Veeam leveraging the Rocky partnership and signing the kernel on the distro Veeam uses as the base for the JeOS after injecting OpenZFS support. Other distros like Ubuntu don't have the same view on the GPL license risks, so they ship ZFS with the modules included.
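The version-dependent checks in the list above could be sketched roughly as follows. This is only an illustration under stated assumptions: the helper names are hypothetical, the 2.2.4 minimum is the "say 2.2.4" example from the list, and the case of Veeam V13 on OpenZFS 2.3 or later (which the list does not cover) is assumed to need only the two block clone parameters.

```bash
# Sketch of the checks listed above. All function names are hypothetical.

# Succeeds when dotted version $1 is >= $2 (relies on GNU "sort -V").
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Succeeds when parameter $1 under directory $3 is readable and equals $2.
param_is() {
    [ -r "$3/$1" ] && [ "$(cat "$3/$1")" = "$2" ]
}

# check_zfs_for_veeam <veeam_major> <openzfs_version> [param_dir]
check_zfs_for_veeam() {
    local veeam="$1" zfs="$2" dir="${3:-/sys/module/zfs/parameters}"
    # Assumed minimum version, taken from the "say 2.2.4" example.
    version_ge "$zfs" 2.2.4 || { echo "OpenZFS $zfs is below the assumed minimum 2.2.4"; return 1; }
    param_is zfs_bclone_enabled 1 "$dir" || { echo "zfs_bclone_enabled must be 1"; return 1; }
    param_is zfs_bclone_wait_dirty 1 "$dir" || { echo "zfs_bclone_wait_dirty must be 1"; return 1; }
    # Only Veeam V12 on OpenZFS 2.3+ needs direct IO switched off.
    if [ "$veeam" -le 12 ] && version_ge "$zfs" 2.3; then
        param_is zfs_dio_enabled 0 "$dir" || { echo "zfs_dio_enabled must be 0 for Veeam V12"; return 1; }
    fi
    echo "ok"
}
```

On a repository host this might be called as "check_zfs_for_veeam 12 2.3.4", which reads the live values under /sys/module/zfs/parameters. The optional third argument exists only so the logic can be exercised against a scratch directory.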

all tests on;

Minimal Rocky9 deployment with full OS patches;

* test1: not latest using DKMS - which results in OpenZFS 2.2.8

disable secure boot

* test2: latest using DKMS - which results in OpenZFS 2.3.4

disable secure boot


Code: Select all

# cat /etc/os-release

NAME="Rocky Linux"

VERSION="9.6 (Blue Onyx)"

ID="rocky"

test1 (OpenZFS 2.2.8): disable secure boot, then install from the default repository

Code: Select all

# dnf install -y https://zfsonlinux.org/epel/zfs-release-2-8$(rpm --eval "%{dist}").noarch.rpm

# dnf install -y epel-release

# dnf install -y kernel-devel

# dnf install -y zfs

# modprobe zfs

# zfs --version

zfs-2.2.8-1

zfs-kmod-2.2.8-1

# cat /sys/module/zfs/parameters/zfs_bclone_enabled

0

# cat /sys/module/zfs/parameters/zfs_bclone_wait_dirty

0

# cat /sys/module/zfs/parameters/zfs_dio_enabled

cat: /sys/module/zfs/parameters/zfs_dio_enabled: No such file or directory

test2 (OpenZFS 2.3.4): disable secure boot, then install with the zfs-testing repository enabled

Code: Select all

# dnf install -y https://zfsonlinux.org/epel/zfs-release-2-8$(rpm --eval "%{dist}").noarch.rpm

# dnf config-manager --enable zfs-testing

# dnf install -y epel-release

# dnf install -y kernel-devel

# dnf install -y zfs

# modprobe zfs

# zfs --version

zfs-2.3.4-1

zfs-kmod-2.3.4-1

# cat /sys/module/zfs/parameters/zfs_bclone_enabled

1

# cat /sys/module/zfs/parameters/zfs_bclone_wait_dirty

1

# cat /sys/module/zfs/parameters/zfs_dio_enabled

1
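Because zfs_dio_enabled does not exist on 2.2.8, a plain cat fails there, as seen in test1. A minimal sketch of a reader that reports a missing parameter instead of erroring (show_zfs_params is a hypothetical helper name; the directory argument is optional):

```bash
# Print the three parameters discussed in this thread, noting any that the
# loaded module does not provide (zfs_dio_enabled is absent on 2.2.8).
# show_zfs_params is a hypothetical helper, not an OpenZFS command.
show_zfs_params() {
    local dir="${1:-/sys/module/zfs/parameters}" p
    for p in zfs_bclone_enabled zfs_bclone_wait_dirty zfs_dio_enabled; do
        if [ -r "$dir/$p" ]; then
            echo "$p:$(cat "$dir/$p")"
        else
            echo "$p:not present"
        fi
    done
}
```

Calling "show_zfs_params" with no argument reads the live module parameters, in the same "name:value" form used in the earlier test output.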

-

HannesK


Hello,

thanks for the detailed post / summary!

Yes, I would say ZFS 2.3.4 and later in combination with Veeam Backup & Replication 13.0 / 13.0.1 and later is ready for QA testing. While we see in this thread that older versions work by changing settings, I would only go for 2.3.4 and later for simplicity.


Quote: Hannes said this particular issue has been resolved in V13.

Yes, I just added ZFS 2.3.4 on Rocky Linux 9.6 with default settings to the Veeam Software Appliance V13 and it works. No changes needed on any side.

Best regards

Hannes

-

ashleyw


just an update.

I've been running some tests on V13 with ZFS 2.3.4 on Rocky 9.6, which is what we have been running since we deployed V13 a couple of weeks ago.

There are 2 unresolved issues we've noticed.

1. Bulk deletions are quite slow in ZFS.

During my testing I had to kill large test backups numerous times. Deleting 30+ TB spread over several hundred files took longer than expected (in the order of 30 minutes or so). There are some changes around async deletes that can be made, but this issue seems to be more pronounced as soon as block cloning enters the mix (which is the default). Slow deletions seem to be a well-documented ZFS phenomenon. I don't consider this a blocker, as this activity is mostly drip-fed during Veeam workloads rather than done as mass deletions like I've been doing.

2. Performance of Synthetic Fulls is slower than expected.

As part of my performance testing, I ran an Active Full backup, followed by two incrementals followed by a synthetic full.

Active full duration: 3hr22min, Processed: 43.9TB, Read 38TB, Transferred 21TB, 336 VMs

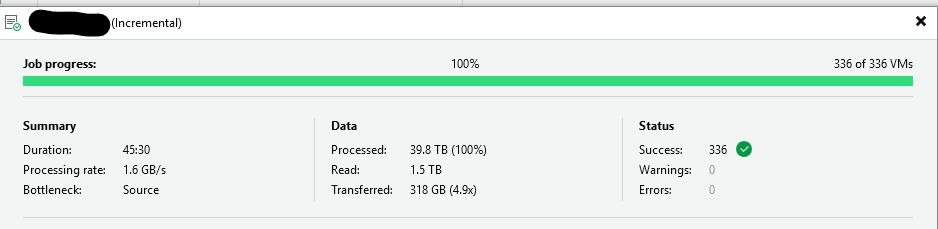

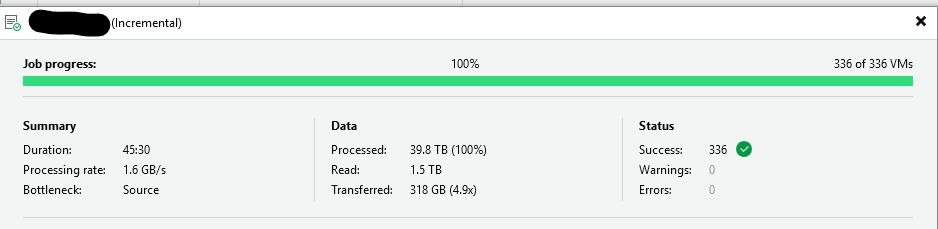

Incremental duration: 45min, Processed: 39.8TB, Read 1.5TB, Transferred 318GB, 336 VMs

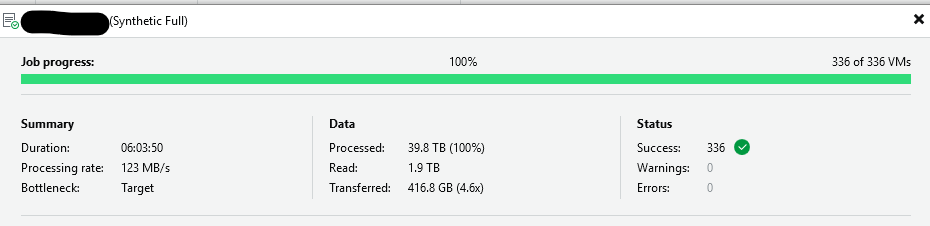

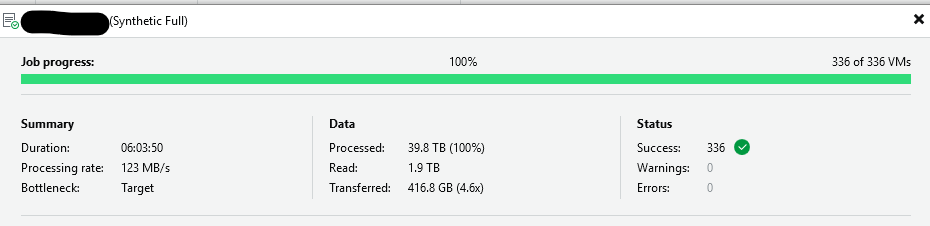

Synthetic full duration: 6hr3min, Processed: 39.8TB, Read 1.9TB, Transferred 416GB, 336 VMs

During a synthetic full cycle, each VM will very quickly have an incremental done on it, and then the status of that VM in the job will change to;

“Creating synthetic full backup (xx% done) [fast clone] ”

I'm assuming that the cloning process duration is related to the number of cloned blocks on the storage repository relating to the VM backup.

Out of the 6hr 3min, I'd estimate 5hr 15min was spent while the various VMs were in the middle of the "Creating synthetic full backup" stage.

Theoretically the performance of the synthetic full should be much closer to that of the incremental: a synthetic run first makes an incremental, and block cloning should then make the synthetic full itself relatively quick.

This doesn't appear to be the case, as you can see by the duration, the synthetic full took nearly twice as long as the Active full.
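To put numbers on that comparison, here is a small sketch using only the durations quoted above (integer shell arithmetic, rounded down; to_minutes is just an illustrative helper):

```bash
# Durations quoted above, converted to minutes (integer arithmetic only).
to_minutes() { echo $(( $1 * 60 + $2 )); }

active_full=$(to_minutes 3 22)      # 202 minutes
synthetic_full=$(to_minutes 6 3)    # 363 minutes
clone_stage=$(to_minutes 5 15)      # 315 minutes, the estimate given above

# Integer percentages, rounded down.
echo "synthetic vs active: $(( synthetic_full * 100 / active_full ))%"
echo "clone stage share: $(( clone_stage * 100 / synthetic_full ))%"
```

This prints 179% and 86%, i.e. the synthetic full took about 1.8 times as long as the active full, and roughly 86% of that time was in the fast clone stage.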

We've reached out to the ZFS team and the initial thoughts are that the BRT (block reference table) that ZFS uses to keep track of the cloned blocks is performing slower than expected due to a possible lack of RAM in the ARC (adaptive replacement cache). Sadly, right now the suggested remediation could be to use a ZFS "special device" to speed up the BRT activities.
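As a first look at the RAM side of that hypothesis, the ARC counters can be read on the repository host. On Linux, OpenZFS exposes them in /proc/spl/kstat/zfs/arcstats as "name type data" rows. The sketch below only reports ARC size against its maximum; whether that is enough to confirm or rule out the BRT theory is an open question, and the helper names are hypothetical.

```bash
# Read one counter from the ARC kstat file ("name type data" rows).
# arc_stat and arc_usage_mib are hypothetical helper names; the file
# argument is optional and defaults to the Linux kstat path.
arc_stat() {
    local name="$1" file="${2:-/proc/spl/kstat/zfs/arcstats}"
    awk -v n="$name" '$1 == n { print $3 }' "$file"
}

# Report the current ARC size against its configured maximum, in MiB.
arc_usage_mib() {
    local file="${1:-/proc/spl/kstat/zfs/arcstats}" size max
    size=$(arc_stat size "$file")
    max=$(arc_stat c_max "$file")
    echo "ARC size: $(( size / 1048576 )) MiB of $(( max / 1048576 )) MiB max"
}
```

Running "arc_usage_mib" during the "Creating synthetic full backup" stage and again while idle would at least show whether the ARC is pinned at its limit during the slow phase.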

This is not something we have the appetite for at this stage, as the special device itself could become a point of failure and add to the complexity and cost of deployment.

The opinion of Veeam support teams is that XFS using hardware-based controllers does not exhibit these issues, so until we have easy workable solutions to these issues, it's not desirable for Veeam to officially support ZFS.

At this stage I need to do further testing to see if these issues can be addressed through ZFS tuning changes, or if coding/design changes need to be made to ZFS.

It's unlikely the performance drop on synthetics is Veeam related, but I can't rule that out either, given that we are likely one of only a handful of people pushing Veeam workloads to a ZFS target like this.

For our backups, the advantages far outweigh the disadvantages and we have plenty of storage on tap and our backup window time is much greater than the run time, so we are happy to roll with longer than expected synthetic full backup times once per week or so, while the problems can be isolated and fixed.

From our perspective, the critical part of backups is always the reliability and durability - both of which ZFS now excels in from our practical experience.

If anyone has any ideas, or has seen these types of issues in other environments supporting block cloning/reflinks, it would be fantastic to hear about it to see if there is any correlation.

Shout out to Hannes and the team from Veeam, and also Alex from iXsystems, for all their suggestions/help to get this far.

thanks

Ashley



-

travisll

- Lurker

- Posts: 1

- Liked: 1 time

- Joined: Jun 27, 2012 9:46 pm

- Full Name: Travis Llewellyn

- Contact:

Re: OpenZFS 2.2 support for reflinks now available

Ashley,

We are testing Ubuntu and ZFS repos for both primary and secondary backups.

Our tests so far have been really consistent, until I added a 96TB backup of a Windows failover cluster using Windows Agent Backup. Even then the primary copy has been working great. The issue that has come up now is with the backup copy to our remote site (10GB dedicated circuit): we have not been able to complete a synthetic full without the server crashing with an out of memory error.

We think that it could be the Ubuntu setup on the remote, and are going to replace it with a new box with much more memory.

Once I have that in place I will have more to be able to update you on.

I will post some stats in a few

