Most of the time we see processing speed increase by between 25% and 50% after an upgrade.

On our most recent upgrade, however, speed decreased, so we examined the process there thoroughly:

The proxy uses Direct SAN access (and according to the log, SAN mode is actually being used). It has 8 cores (Xeon Westmere) at 2.8 GHz and should be able to cope with parallel processing.

Parallel processing is enabled, compression is set to the new "optimal" level, and proxy and repository run on the same server, with the repository on a locally attached array of 24 disks (!).

Under V7, the job reports the bottleneck as the proxy and sometimes the network (!). There should be no network traffic at all, since we read directly from the SAN and write to a local disk.

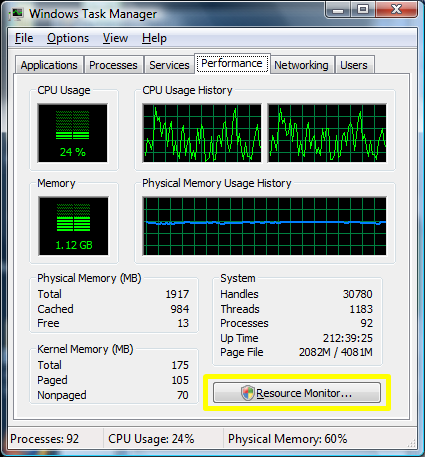

Task Manager shows an almost fully saturated network (1 GBit) and a fully saturated CPU, neither of which we expected.
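For scale, here is a quick back-of-the-envelope check of what a saturated 1 GBit link means for backup throughput. This is only a sketch: it assumes the raw line rate and ignores protocol overhead, so real-world throughput will be somewhat lower. The function name is purely illustrative.

```python
def link_ceiling_mb_per_s(link_gbit: float) -> float:
    """Theoretical payload ceiling of a link in MB/s (10^6 bytes), ignoring protocol overhead."""
    bits_per_second = link_gbit * 1_000_000_000  # 1 GBit = 10^9 bits per second
    return bits_per_second / 8 / 1_000_000       # bits -> bytes -> MB

ceiling = link_ceiling_mb_per_s(1.0)
print(f"1 GBit link: at most {ceiling:.0f} MB/s")  # 1 GBit link: at most 125 MB/s
```

If data really is leaving and re-entering the server over a 1 GBit NIC, processing rates would be expected to stall somewhere below this figure, regardless of how fast the SAN and the local 24-disk array are.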

During the backup, Windows Resource Monitor shows five Veeam agent processes.

One is listed as VeeamAgent64.EXE and the other four as VeeamAgent.EXE.

According to Resource Monitor, only VeeamAgent64.EXE seems to receive data over the network, while the four other agents send data over the network.

Is this by design, or is B&R mistakenly routing the traffic out and back in again?

Thanks for any ideas.