-

crun

- Novice

- Posts: 9

- Liked: 1 time

- Joined: Oct 19, 2022 12:45 pm

- Contact:

Re: Recommendations for backup storage, backup target

Thanks for the input, Jorge. Yeah, DD is our capacity tier. Archive is covered too. I will look into HPE Apollo and ask HPE about possible bottlenecks too (and about some U.3/NVMe doubts I have).

I did not see too many posts about all-flash (or SAS NVMe) as performance storage, but the virtual labs, instant restore or large DR restores should see a lot of benefit here, I think.

-

LickABrick

- Enthusiast

- Posts: 67

- Liked: 31 times

- Joined: Dec 23, 2019 7:26 pm

- Full Name: Lick A Brick

- Contact:

Re: Recommendations for backup storage, backup target

> jorgedlcruz wrote: ↑Oct 20, 2022 6:14 am
>
> If I were to buy and use HPE I would very much use HPE Apollo, and leverage the great Architecture References that are out there.
>
> Thanks!

Any reason not to use a Linux OS and make use of immutability? RHEL / Ubuntu are among the supported OSes for the HPE Apollo.

-

tsightler

- VP, Product Management

- Posts: 6053

- Liked: 2874 times

- Joined: Jun 05, 2009 12:57 pm

- Full Name: Tom Sightler

- Contact:

Re: Recommendations for backup storage, backup target

I've seen a few cases where customers with deep pockets have used all-flash, SAN-based primary storage systems for their first-tier repositories, but rarely have I seen someone try to build such storage in a standalone server (I can't actually think of a recent case). Certainly, RAID controller performance is likely to be an issue from a throughput perspective. While I haven't tested it myself, there have been quite a few reports of users giving it a spin with the common RAID cards, and performance for most seems to top out around 8-10GB/s. I mean, this is fast, but not much faster than what spinning-disk storage can already do from a throughput perspective (it's not uncommon for an Apollo full of spinning drives to be able to get 5GB/s throughput). Now, we're talking about sequential throughput here, so I'm not saying that NVMe wouldn't still have a huge advantage: operations like synthetic fulls, backup copies, FLRs, instant restores, etc. would all be highly accelerated due to the much lower latency during random operations, and that can actually have an even bigger impact than overall write throughput, which is generally "fast enough" anyway.

As Jorge mentioned, there are some new vendors out there that are pushing GPU-based RAID controllers that have broken the 100GB/s barrier for RAID with NVMe, which is just crazy, but I haven't personally seen them in any major vendor offering so far. However, I'm of course not fully aware of each vendor's current offerings; this is based mostly on what I've seen delivered to the field so far. I would also recommend working closely with your vendor for a validated configuration.

-

dasfliege

- Service Provider

- Posts: 335

- Liked: 70 times

- Joined: Nov 17, 2014 1:48 pm

- Full Name: Florin

- Location: Switzerland

- Contact:

Re: Recommendations for backup storage, backup target

We are building a new datacenter and have been looking for backup storage options for quite some time, but haven't found the perfect solution so far.

In our current datacenter we have roughly 100TB of source data across two sites and store the backups on two NetApp E2860 SANs with 480TB of usable space each. So our current usable backup space is almost 1PB, and it's quite full already.

We use ReFS with block clone for our primary backup repository and XFS with block clone for our GFS/copy job repository. All full and GFS backups are synthetic; we never perform active fulls. We store 52 weekly restore points.

This solution is working quite okay for our current environment, but we also have several other devices (Synology/Supermicro) where we store M365 and Cloud Connect backups for other customers. In our new datacenter design, we would like to have these backups stored on the same repository as all our other backups. We would also like to increase the backup frequency from one per day to at least three per day. This will certainly increase the pressure on the repository.

Our budget isn't unlimited, so we are looking for a reliable enterprise solution which still offers good value for the cost. We have already evaluated dedup storage like Huawei OceanProtect, but we don't really consider it a good alternative to a well-sized ReFS/XFS-based solution, as all dedup appliances basically force you to perform active full backups. In order not to overstress our primary storage, we don't want to perform active fulls every week if it is not absolutely necessary.

For our new datacenter, we need double the usable capacity, so 2PB stretched across two sites/storage systems. We are considering S3 storage as well, as we would like to have "real" immutable backups. We are already leveraging XFS immutable backups in our current setup, but as this is only timestamp-based immutability, I don't consider them "real" immutable.

I wanted to ask if there are people dealing with a similar environment and similar requirements who are running setups which work well, have a good cost/value factor and don't need an entire rack to house the hardware. I think there is no way around having flash storage, at least for the primary backups, if we want to increase the backup frequency. On the other hand, I don't think that we absolutely need flash for our long-term retention repository. We have already evaluated several systems from Huawei, NetApp, HPE, Supermicro and Scality (S3), but haven't found exactly what we are looking for at the price we are willing to pay. What designs are you guys running?

-

Gostev

- former Chief Product Officer (until 2026)

- Posts: 33084

- Liked: 8179 times

- Joined: Jan 01, 2006 1:01 am

- Location: Baar, Switzerland

- Contact:

Re: Recommendations for backup storage, backup target

> dasfliege wrote: ↑Jan 10, 2023 2:30 pm
>
> For our new datacenter, we need the double amount of usable capacity, so 2PB stretched to two sites/storage systems. We are considering S3 storage as well, as we would like to have "real" immutable backups. We are already leveraging XFS immutable backups in our current setup. But as this is only a timestamp-based immutability, i don't consider them as "real" immutable.

Immutability on object storage does not work any differently from Veeam hardened repositories.

The only "real" immutable backups are the ones you put on WORM media such as WORM tapes, where you're physically unable to delete data once it has been written. ALL other immutability implementations are software-based locks relying on timestamps, with data stored on normal writeable media.

-

dasfliege

- Service Provider

- Posts: 335

- Liked: 70 times

- Joined: Nov 17, 2014 1:48 pm

- Full Name: Florin

- Location: Switzerland

- Contact:

Re: Recommendations for backup storage, backup target

I agree that both are just software implementations and that it is not comparable to a real air-gapped backup (which we have as well, as we write everything to tape once a week).

But according to everything I've heard so far, S3 Object Lock is still a little harder to get around than just setting the Linux OS date to some point in the future. Correct me if I'm wrong.

Apart from the object-lock functionality, is it worth having an S3-compatible repository for either short- or long-term backups? Is there any benefit compared to a classic ReFS or XFS repo? I would be interested in an answer to this for classic VM backups as well as for M365 backups, as we do both.
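
The clock-change concern raised above can be illustrated with a minimal sketch. It models a generic timestamp-based lock only; it is not how Veeam hardened repositories or any S3 appliance actually implement immutability, and the function name and the 30-day window are assumptions made up for the example.

```python
from datetime import datetime, timedelta, timezone


def is_locked(retain_until: datetime, now: datetime) -> bool:
    """A timestamp-based lock holds only while the clock reads earlier than the expiry."""
    return now < retain_until


# Hypothetical backup written on Jan 10, 2023 with an assumed 30-day lock.
written = datetime(2023, 1, 10, tzinfo=timezone.utc)
retain_until = written + timedelta(days=30)

honest_clock = written + timedelta(days=1)   # one day after the write
skewed_clock = written + timedelta(days=31)  # clock pushed past the expiry

print(is_locked(retain_until, honest_clock))  # True
print(is_locked(retain_until, skewed_clock))  # False
```

Whatever the implementation, the lock is only as trustworthy as the clock it is evaluated against, so the practical question is who is able to change that clock.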

-

Gostev

- former Chief Product Officer (until 2026)

- Posts: 33084

- Liked: 8179 times

- Joined: Jan 01, 2006 1:01 am

- Location: Baar, Switzerland

- Contact:

Re: Recommendations for backup storage, backup target

That's just marketing... absolutely no difference: as soon as the appliance has been breached and privileges elevated to root, you're done whether it is an object storage appliance or a hardened repository.

However, where hardened repositories run circles around object storage appliances is specifically the attack surface. Object storage appliances will always have an infinitely bigger attack surface due to the presence of a web UI for remote management. On the other hand, properly deployed hardened repositories will only have local (physical console) access.

The big benefit of object storage appliances is not added security, but the fact that they are "plug and play" and are thus easier to manage (which comes specifically at the cost of bigger attack surfaces). Nevertheless, I would expect 80% of customers to choose an object storage appliance for simplicity (not just management but also CPU/RAM sizing, storage configuration and so on). Even if one can get MUCH bigger bang for the buck with hardened repos, these are only good for people with some level of Linux expertise. I think this thread provides an excellent summary (read my response first, then the first post of the topic starter). BTW it is best we move further "versus" discussion there

-

LickABrick

- Enthusiast

- Posts: 67

- Liked: 31 times

- Joined: Dec 23, 2019 7:26 pm

- Full Name: Lick A Brick

- Contact:

Re: Recommendations for backup storage, backup target

An object storage repo is pretty easy to maintain. No updates (OS, security, software, ...), no need to upgrade or maintain hardware, no need to worry about expanding storage space.

These are all things that take up time (and require knowledge about certain things), and time costs your company/customer money.

S3 Immutability is software based, but you pretty much have to hack the hosting provider of your S3 storage to delete the files from there.

-

Gostev

- former Chief Product Officer (until 2026)

- Posts: 33084

- Liked: 8179 times

- Joined: Jan 01, 2006 1:01 am

- Location: Baar, Switzerland

- Contact:

Re: Recommendations for backup storage, backup target

Here we're talking about on-prem storage options and specifically about S3-compatible object storage appliances... not about cloud object storage providers.

-

dasfliege

- Service Provider

- Posts: 335

- Liked: 70 times

- Joined: Nov 17, 2014 1:48 pm

- Full Name: Florin

- Location: Switzerland

- Contact:

Re: Recommendations for backup storage, backup target

Thx for the clarification and your opinions. I will join the discussion in the other thread for sure.

But back to my original question about storage solutions for our scenario. As far as I understand, there is basically nothing better in terms of "bang for the buck" than XFS repos at the moment, as long as you have the skills to build and maintain them? As we already have XFS repos now and they perform quite well, I guess we'll consider this the way to go for our future setup as well.

So the only question would be where we store the data. Apollo 4500 may be the best option, but does it scale? As far as I know it's limited to 1.2PB of raw storage with 60 x 20TB NL-SAS disks. If we don't go with an all-flash system, we would definitely want to store the primary backups on flash. Would it be possible to have the Apollo in a mixed configuration with SSD and NL-SAS? Does anyone have experience with such a setup?

Do you guys know any product other than NetApp E-Series which just focuses on pretty simple but fast and cheap block storage? I guess that would be the only alternative to an Apollo when it comes to "only" hosting some XFS repos.
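
For reference, the 1.2PB raw figure is simply 60 x 20TB. A rough sketch of how raw capacity turns into usable capacity is below; the RAID 60 layout with 4 groups is purely an assumption for illustration (nobody in the thread stated a layout), and the calculation ignores hot spares, filesystem overhead and TB/TiB differences.

```python
def raw_capacity_tb(disks: int, disk_tb: float) -> float:
    """Raw capacity: number of disks times disk size."""
    return disks * disk_tb


def raid60_usable_tb(disks: int, disk_tb: float, groups: int) -> float:
    """Usable capacity when the disks are split evenly into RAID 6 groups
    (two parity disks per group) that are striped together."""
    if groups < 1 or disks % groups != 0:
        raise ValueError("disks must divide evenly into groups")
    per_group = disks // groups
    if per_group < 4:
        raise ValueError("RAID 6 needs at least 4 disks per group")
    return groups * (per_group - 2) * disk_tb


print(raw_capacity_tb(60, 20))             # 1200 (TB, i.e. 1.2PB raw)
print(raid60_usable_tb(60, 20, groups=4))  # 1040 (TB, assumed 4 x 15-disk groups)
```

With this assumed layout, a single 60-bay box lands at roughly half of the 2PB target, so the scaling question is really about how many such boxes each site would need.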

-

Gostev

- former Chief Product Officer (until 2026)

- Posts: 33084

- Liked: 8179 times

- Joined: Jan 01, 2006 1:01 am

- Location: Baar, Switzerland

- Contact:

Re: Recommendations for backup storage, backup target

I personally see no reason to go flash for backup repositories in a world where even two years ago, a single all-in-one Veeam appliance based on an HPE Apollo server was already able to deliver 11GB/s backup throughput. Do you really need more than that to fit the backup window in your environment?

Unless of course I was a heavy DataLabs feature user and needed to constantly run many VMs from backups for various production use cases like Dev/Test.

-

dasfliege

- Service Provider

- Posts: 335

- Liked: 70 times

- Joined: Nov 17, 2014 1:48 pm

- Full Name: Florin

- Location: Switzerland

- Contact:

Re: Recommendations for backup storage, backup target

It's not only the throughput that matters but also the ability to run parallel tasks, e.g. when you want to perform a restore while backups are running to the same storage.

As a service provider, we have to guarantee a specific RTO/RPO to our customers and therefore have to make sure that their backups run 24/7/365 according to their schedule. This should not be impacted even though we perform copy jobs to a second destination on a daily basis and copy to tape on a weekly basis, both of which put pressure on the storage as well and lock the backup files for the duration of the process, so the duration has to be kept to a minimum. We also have to guarantee that we can execute restores at any time, even while other tasks are running on the repository. That won't work out with non-flash systems and 100TB+ of source data.

Synthetic operations don't need much throughput either, but they rely heavily on good IOPS performance of the storage. So if you have many synthetic operations running at the same time (we have 50 jobs running more or less simultaneously), EVERY storage system with rotating disks will be at its limit.

We would also like to store our M365 backups on the same storage system, and I currently have a case open with Veeam Support where we are troubleshooting poor restore performance of Exchange mailboxes to a local PST file. Even though our current storage can deliver a sequential throughput of over 300MB/s, we only restore at around 5MB/s. It would take weeks to restore a complete organization at that rate. We don't know yet if it is a problem with the JET DB or something else, but the fact is that it is much faster when restoring from SSD-based storage. So as long as we have to guarantee a certain restore rate to our customers in case of an emergency, I doubt that there is any way around having at least the M365 backups on flash storage.
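
To put the restore rates above into perspective, here is a small back-of-the-envelope calculation. The 5TB organization size is a hypothetical figure chosen for the example, not a number from our environment, and decimal units (1TB = 1000GB) are assumed.

```python
def restore_days(data_gb: float, rate_mb_per_s: float) -> float:
    """Days needed to restore data_gb at a sustained rate in MB/s (decimal units)."""
    if rate_mb_per_s <= 0:
        raise ValueError("rate must be positive")
    seconds = data_gb * 1000 / rate_mb_per_s
    return seconds / 86400


org_size_gb = 5000  # hypothetical 5TB of mailbox data

print(round(restore_days(org_size_gb, 5), 1))    # 11.6 (days at 5MB/s)
print(round(restore_days(org_size_gb, 300), 1))  # 0.2 (days at 300MB/s)
```

At 5MB/s, every additional terabyte adds a little over two days, which is how a full organization restore ends up being measured in weeks.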

-

Gostev

- former Chief Product Officer (until 2026)

- Posts: 33084

- Liked: 8179 times

- Joined: Jan 01, 2006 1:01 am

- Location: Baar, Switzerland

- Contact:

Re: Recommendations for backup storage, backup target

Honest question: when talking about image-level backups, is that your personal experience or an assumption? Asking because when these "monster storage servers" first appeared about 8 years ago, with Cisco C3260 being the first if I remember correctly, we very quickly had some of our largest service providers at the time storing PBs of customers' data on these very servers filled with HDDs. And their feedback was exceptional, which made me start recommending this approach confidently to enterprise customers as well.

Besides, considering how much storage and fabric technologies have progressed since then, I'd expect even faster performance from such storage servers these days...

-

dasfliege

- Service Provider

- Posts: 335

- Liked: 70 times

- Joined: Nov 17, 2014 1:48 pm

- Full Name: Florin

- Location: Switzerland

- Contact:

Re: Recommendations for backup storage, backup target

It's an assumption I am making based on the experience we've had with our two NetApp E2860s and on the physics of a rotating disk. I've never heard of any bottleneck when just writing sequential data to an array of 60 disks, but as soon as it comes to simultaneous read/write operations, they suffer heavily. Just measuring throughput and backup speed of image-level backups to an array is not a benchmark that is anywhere near a real-life scenario if you want to provide a reliable and redundant backup AND restore solution at any time.

I highly doubt that an Apollo 4500 is able to do more magic with its 60 disks than an E2860, especially in terms of IOPS. And based on my experience, the E2860 controllers are beating the sh** out of their 60 disks when under heavy r/w load.

I may be wrong with my assumption and the Apollo with HDDs may be the solution for every scenario, but even with rotating disks, it's quite expensive to just try it out and (maybe) fail. That's why I was asking for real-life experiences with setups similar to ours.

You are talking about customers writing PBs of data to Apollo-like systems years ago: how many of those systems did they run in parallel? Today the max raw capacity for a 4500 is 1.2PB, so I am sure they had plenty of them years ago when there were no 20TB disks. I'm just asking because it would make a big difference if someone is running, say, 3 Apollos as the performance tier in a SOBR (180 disks) compared to our plan, which is running a single Apollo (60 disks) in each of our datacenters. The RAID config used would be of interest as well.

-

crun

- Novice

- Posts: 9

- Liked: 1 time

- Joined: Oct 19, 2022 12:45 pm

- Contact:

Re: Recommendations for backup storage, backup target

> crun wrote: ↑Oct 19, 2022 1:26 pm
>
> Hi
>
> We are planning a fast short-term performance storage for the primary backup. Without diving into details, does immutable SOBR of (3-4) servers (e.g. HPE ProLiant DL380) with each 24x NVMe RAID6 sound reasonable?
>
> I assume NVMe would basically negate the write performance impact of RAID6 and RAID60 would be overkill? Or the raid controller would not be able to keep up and bottleneck drives?

In case anyone wonders if an HPE DL380 filled with NVMe (RAID6) is actually fast: well, we get 600MB/s per-stream restore (hotadd). Blazing speeds.

-

bct44

- Veeam Software

- Posts: 184

- Liked: 42 times

- Joined: Jul 28, 2022 12:57 pm

- Contact:

Re: Recommendations for backup storage, backup target

Hello,

I've been running a full rack of Apollo Gen10 4210 (4U) servers with 16TB HDDs on Linux in production for two years. I didn't check IOPS, but with fio and diskspd4linux (Veeam parameters) I can achieve 2GB/s per RAID on read and write. My current problem is that I sometimes saturate the 100Gbps network during the backup window.

Read and write performance always depends on the real-world scenario. Let's take the most ideal scenario I've met for an Apollo repo: Exchange server nodes on physical hosts, so sequential source to sequential repo.

Active full jobs across 2 Apollo nodes (so 4 RAID controllers) ran at around 6-8GB/s.

Restores of big files ran at 3-4GB/s; the bottleneck was the primary storage.

RAID controller => strip size 256k, 90% write / 10% read.

If you have unlimited budget for the performance tier, why not full flash on an array...

@dasfliege: Could you share some fio or diskspd results from an array on the NetApp E2860? It could be interesting to compare with the results from the Apollo thread.
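
To make such results comparable, it helps to share the exact command line. Below is a minimal sketch that builds a mixed random read/write fio invocation. Every value in it (target path, 512k block size, 50/50 mix, queue depth, job count, file size, runtime) is a placeholder assumption, not the parameters used in the tests above and not official Veeam guidance.

```python
import shlex


def fio_mixed_random_args(target, read_pct=50, block_size="512k",
                          jobs=4, runtime_s=60):
    """Build an fio argument list for a mixed random read/write test.

    All defaults are illustrative placeholders; adjust them to your workload.
    """
    if not 0 <= read_pct <= 100:
        raise ValueError("read_pct must be between 0 and 100")
    return [
        "fio",
        "--name=mixed-random",
        f"--filename={target}",
        "--rw=randrw",              # random reads and writes interleaved
        f"--rwmixread={read_pct}",  # percentage of the mix that is reads
        f"--bs={block_size}",
        "--size=10G",
        "--direct=1",               # bypass the page cache
        "--ioengine=libaio",
        "--iodepth=16",
        f"--numjobs={jobs}",
        f"--runtime={runtime_s}",
        "--time_based",
        "--group_reporting",
    ]


# Hypothetical test file on the repository volume.
args = fio_mixed_random_args("/mnt/repo/fio-test.bin")
print(shlex.join(args))
```

Posting the printed command together with the numbers lets others rerun the same test on their own hardware.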

Bertrand / TAM EMEA

-

dasfliege

- Service Provider

- Posts: 335

- Liked: 70 times

- Joined: Nov 17, 2014 1:48 pm

- Full Name: Florin

- Location: Switzerland

- Contact:

Re: Recommendations for backup storage, backup target

@bct44

Sorry for my late reply. Just stumbled across this old thread, as we're still in the process of evaluating a new backup storage. In the meantime we've extended our NetApps to 180 disks each (1.5PB), which is the max supported.

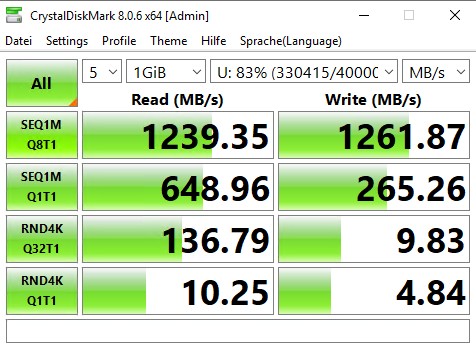

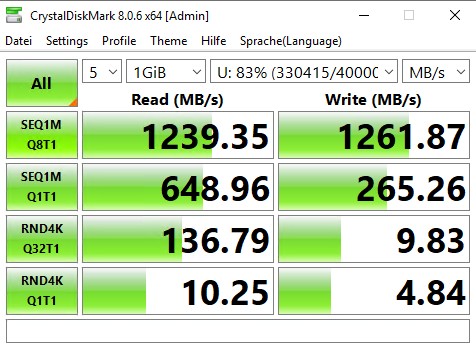

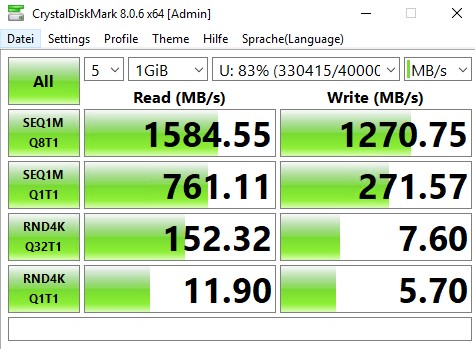

You did not say what exact parameters you used for your tests and therefor it's hard to compare, as these could make a hell of a difference. For your reference, i am sharing a recent CrystalDiskMark test i've run on our HPE host attached via 10Gbit to the NetApp. A simple sequential test maxes out at 1250MB/s, which is the maximum a 10Gbit link can deliver. But as i wrote before, sequential tests are nowhere near a real scenario, unless you're really just writing infinite incrementals to your storage without performing synthetic operations, restores, copys, tape-outs or anything else which is reading data while your're writing at the same time. As soon as it comes to random IO, spinning disks are just crap and it would be interesting if you Apollo is performing any better in this (realistic) scenario:

Edit: Just seen that i had a tapejob running at the time i performed the test, so real values may be higher. I will re-take the test when the storage is idle, if it's of any interest.
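
The 1250MB/s ceiling follows directly from the link speed. A quick sketch of the conversion, assuming decimal units and ignoring protocol overhead (so real-world numbers land somewhat lower):

```python
def link_ceiling_mb_per_s(gbit_per_s: float) -> float:
    """Theoretical maximum payload rate of a link in MB/s (decimal, no overhead)."""
    bytes_per_s = gbit_per_s * 1e9 / 8  # 8 bits per byte
    return bytes_per_s / 1e6


print(link_ceiling_mb_per_s(10))   # 1250.0
print(link_ceiling_mb_per_s(100))  # 12500.0
```

The same arithmetic puts the 100Gbps network mentioned earlier in the thread at 12.5GB/s.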

-

dasfliege

- Service Provider

- Posts: 335

- Liked: 70 times

- Joined: Nov 17, 2014 1:48 pm

- Full Name: Florin

- Location: Switzerland

- Contact:

Re: Recommendations for backup storage, backup target

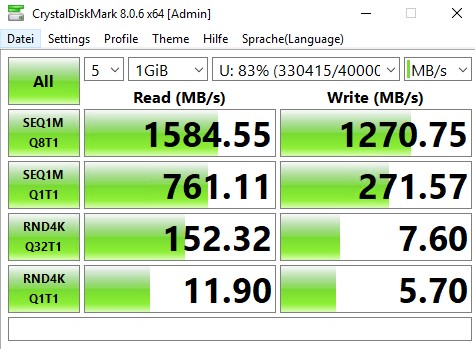

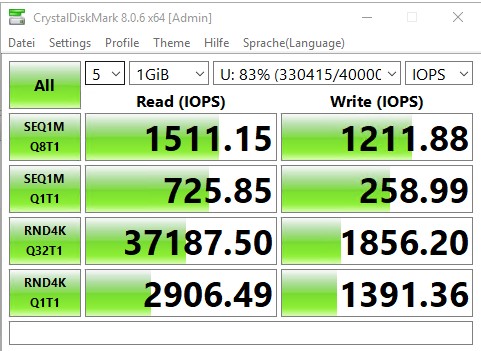

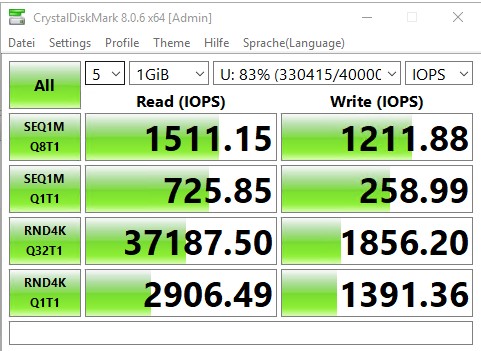

Reperformed test on idle storage:

Throughput:

IOPS:

Throughput:

IOPS:
